Understand the Docker Architecture with TheSecMaster

This is our second article about Docker. We covered most of the basic information about Docker and container technology in a different blog post. Please make sure you read the post “Understand Docker Containers With TheSecMaster” if you haven’t read it yet. In this post, we will delve deeper into the architecture of Docker and understand how it works under the hood. Let’s start learning about the Docker architecture on thesecmaster.com.

Components of Docker

Docker is a popular open-source tool designed to facilitate the creation, deployment, and execution of applications using containers. Containers allow developers to bundle an application with all its necessary parts, such as libraries and dependencies, and distribute it as a single package. Docker requires multiple components to function properly. Before we dive into the Docker Architecture, let’s familiarize ourselves with the different components of Docker.

Docker Engine: The Docker Engine is the base layer of the Docker architecture. It’s a lightweight runtime that builds and runs your Docker images. The Docker Engine includes the Docker CLI (Command Line Interface), API, and the Docker daemon.

Docker Images: Docker images are the basis of containers. An Image is an ordered collection of root filesystem changes and the corresponding execution parameters for use within a container runtime. This is a lightweight, stand-alone, executable package that includes everything needed to run a piece of software, including the code, a runtime, libraries, environment variables, and config files.

Docker Containers: A Docker container is a runtime instance of a Docker image. In other words, it is what you get when an image is executed. It is a lightweight and portable encapsulation of an environment in which to run applications.

Dockerfile: Docker can build images automatically by reading the instructions from a Dockerfile, a text file that contains all the commands, in order, needed to build a given image.

Docker Compose: Docker Compose is a tool for defining and running multi-container Docker applications. With Compose, you use a YAML file to configure your application’s services.

Docker Hub/Registry: Docker Hub is a cloud-based registry service that allows you to link to code repositories, build and test your images, and store manually pushed images so you can deploy them to your hosts.

Docker Daemon: The Docker daemon is what actually executes the commands sent by the Docker Client, such as building, running, and distributing your containers.

Docker Client: The Docker Client is the primary user interface to Docker. It accepts commands from the user and communicates back and forth with a Docker daemon.

These components work together to provide a cohesive and powerful toolset for containerization. They make it possible to isolate applications in their environments, ensuring that they run consistently across different systems.

Architecture of Docker

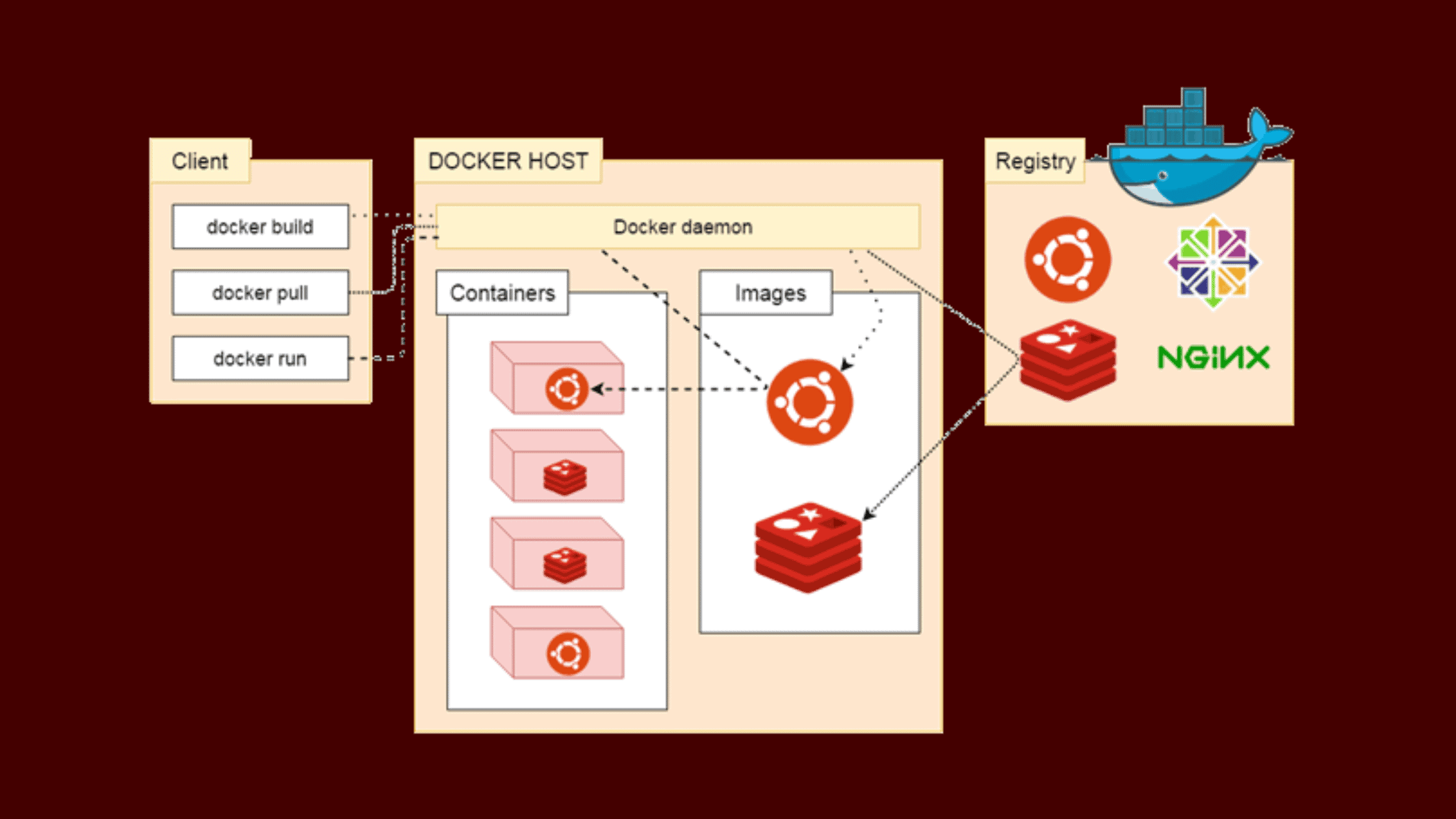

Collectively, Docker composed of multiple components. All its components are grouped into three major components. Docker Client, Docker Host, and Docker Registry. Let’s learn about all of them.

Image Source: javatpoint.com

Docker Client

The Docker Client, often referred to just as “docker”, is the primary user interface to Docker. It’s a command-line interface (CLI) that allows users to interact with Docker daemons, which do the actual work of building, running, and managing Docker containers.

The Docker client and daemon can run on the same host, or they can communicate over a network. By default, a Docker client communicates with the Docker daemon running on the same host machine, but it can also be configured to talk to Docker daemons on different hosts.
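As a rough illustration of how a client decides which daemon to talk to, the sketch below mimics that lookup in Python. It assumes the usual Linux default socket (`unix:///var/run/docker.sock`) and the `DOCKER_HOST` environment variable as an override. The function name `resolve_daemon_endpoint` and the example address are hypothetical, and the real CLI also takes Docker contexts into account, which this sketch ignores.

```python
import os

# Usual default endpoint on Linux (assumption for this sketch).
DEFAULT_ENDPOINT = "unix:///var/run/docker.sock"


def resolve_daemon_endpoint(host_flag=None, env=None):
    """Return the daemon endpoint a client would use (simplified model)."""
    env = os.environ if env is None else env
    if host_flag:                  # value that would be passed to `docker -H`
        return host_flag
    if env.get("DOCKER_HOST"):     # environment variable override
        return env["DOCKER_HOST"]
    return DEFAULT_ENDPOINT        # local daemon on the same host


# Local daemon by default, remote daemon when DOCKER_HOST is set.
print(resolve_daemon_endpoint(env={}))
print(resolve_daemon_endpoint(env={"DOCKER_HOST": "tcp://192.0.2.10:2376"}))
```

The explicit flag wins over the environment variable, which in turn wins over the default, so the same client can be pointed at a different host without reinstalling anything.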

Here are some key Docker client commands:

docker run: This command is used to create and start a Docker container from a Docker image.

docker build: This command is used to build a new Docker image from a Dockerfile and a “context”. The build’s context is the set of files located in the specified PATH or URL.

docker pull: This command is used to pull a Docker image from a Docker registry. If no tag is specified, it pulls the image tagged latest, which is not necessarily the most recent build.

docker push: This command is used to push an image to a Docker registry.

docker stop: This command is used to stop a running Docker container.

Remember, every command sent from the Docker client is actually executed by the Docker daemon. The client is just the user interface to the daemon.
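To make the default-tag behaviour of commands like docker pull concrete, here is a small Python sketch that splits an image reference into a name and a tag, falling back to `latest` when no tag is given. The helper `split_image_reference` is hypothetical and deliberately simplified: it does not handle digest references (`name@sha256:...`), and `localhost:5000` is only an example registry address.

```python
def split_image_reference(ref):
    """Split 'name[:tag]' into (name, tag); the tag defaults to 'latest'."""
    if "@" in ref:
        raise ValueError("digest references are not handled in this sketch")
    # A colon only marks a tag if it appears after the last slash;
    # earlier colons belong to a registry host:port such as localhost:5000.
    last_segment = ref.rsplit("/", 1)[-1]
    if ":" in last_segment:
        name, tag = ref.rsplit(":", 1)
        return name, tag
    return ref, "latest"


for ref in ["nginx", "nginx:1.25", "localhost:5000/app", "localhost:5000/app:v2"]:
    print(ref, "->", split_image_reference(ref))
```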

Docker Host

Docker Host refers to a runtime environment that runs the Docker daemon, containers, and images. Essentially, a Docker host is any machine that has Docker installed and running on it. Docker host is responsible for executing the instructions sent by the Docker client and managing Docker objects.

Here are the major components in a Docker Host:

Docker Daemon (dockerd): The Docker Daemon runs on the Docker host machine and listens for Docker API requests. It manages Docker objects such as images, containers, networks, and volumes, as well as Docker services.

Docker Images: These are read-only templates with instructions for creating Docker containers. The Docker host manages these Docker images.

Docker Containers: These are runnable instances of Docker images. A Docker container is a lightweight, stand-alone, and executable software package that includes everything needed to run a piece of software, including the code, runtime, libraries, environment variables, and config files.

Docker Networks and Storage: Docker Daemon also manages networking and storage for containers. Docker networking allows containers to interact with each other and with the outside world, while Docker storage allows data persistence and data sharing across containers.

In terms of architecture, multiple Docker hosts can be clustered together in a Docker Swarm, providing the ability to create a fault-tolerant, distributed system for high availability of services. In a Swarm, one or more nodes act as manager nodes, which orchestrate and schedule containers, while the remaining worker nodes run the containers. By default, manager nodes can run containers as well.

Docker Registry

A Docker Registry is a repository for Docker images. It stores Docker images and allows you to distribute them across different environments. Docker Registries can be public or private.

The main public Docker registry is Docker Hub, which is also the default registry where Docker looks for images. Anyone can publish their own Docker images to Docker Hub, or use images published by others. Docker Cloud, a hosted service that Docker Inc. once offered alongside it, has since been discontinued.

Private Docker Registries are usually used within organizations where images are proprietary and are not to be shared publicly. Companies use private Docker registries to manage and distribute their Docker images within their infrastructure securely.

Here’s how it works:

docker pull: When you use the docker pull command, Docker downloads the Docker image from the Docker registry to your local system.

docker push: When you use the docker push command, Docker pushes your Docker image to the specified Docker registry. For this, you’ll need to have rights to push to the registry, which usually means you need to be authenticated.

docker run: When you use the docker run command, Docker first checks if the Docker image is available on your local system. If it’s not, Docker will pull it from the Docker registry before creating the container.
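The check-locally-then-pull behaviour of docker run can be modelled in a few lines of Python. This is only a toy simulation under stated assumptions: the local image store and the registry are plain dictionaries, and `run_image` is a hypothetical name, not part of any Docker API.

```python
def run_image(image, local_cache, registry):
    """Simulate `docker run`: use the local image, or pull it first."""
    events = []
    if image not in local_cache:
        if image not in registry:
            raise LookupError(f"image not found in registry: {image}")
        local_cache[image] = registry[image]   # simulated `docker pull`
        events.append("pulled")
    events.append("container started")
    return events


registry = {"nginx:latest": "image-data"}   # stand-in for a remote registry
local_cache = {}                            # stand-in for the host's image store

print(run_image("nginx:latest", local_cache, registry))  # first run pulls
print(run_image("nginx:latest", local_cache, registry))  # second run uses the cache
```

The second call skips the pull because the image is already in the local store, which is why repeated runs of the same image start faster than the first one.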

In short, a Docker registry is a crucial component in the Docker architecture, playing an essential role in distributing Docker images. It provides a centralized resource for managing, distributing and deploying Docker images.

Docker Operational Workflow

You will have to go through several stages to complete a Docker Workflow, from the development of an application to its deployment. The following are the main steps of the Docker workflow:

Creating the Dockerfile: The Docker workflow begins by creating a Dockerfile. A Dockerfile is a text file containing a collection of instructions and commands that are used to create a Docker image. It includes all the necessary information for running an application: the base image, the software dependencies, the application code, environment variables, and the commands for running the application.

Building the Docker Image: Once the Dockerfile is prepared, the next step is building the Docker image. The Docker image is created by running the docker build command. This command reads the Dockerfile, executes the instructions, and then returns a Docker image. This image includes everything that will be needed to run an application, including the runtime environment, application code, libraries, and environmental settings.

Testing the Docker Image: After the Docker image has been built, it’s usually good practice to test it. This can involve running unit tests, integration tests, or other checks to ensure that the Docker container will behave as expected.

Pushing Docker Image to a Registry: After testing, the image is then pushed to a Docker registry. A Docker registry is a repository for Docker images. Docker Hub is a public registry that anyone can use, and Docker is configured to look for images on Docker Hub by default. You can also host your own registry.

Pulling and Running the Docker Image from a Registry: The final step of the Docker workflow involves pulling the Docker image from the registry and running it on the desired host. This can be a local machine, a virtual machine in a cloud, or a dedicated server. This is done using the docker run command.
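The steps above can be sketched as an ordered list of Docker CLI invocations. The Python snippet below only builds and prints the commands; it does not run Docker. Everything specific in it is an assumption for illustration: the image name `example.com/team/app:1.0`, the file `app.py`, the minimal Dockerfile contents, and the idea of using a plain `docker run --rm` as a smoke test.

```python
import shlex

# A minimal, hypothetical Dockerfile for a small Python application.
DOCKERFILE = """\
FROM python:3.12-slim
WORKDIR /app
COPY . .
CMD ["python", "app.py"]
"""


def workflow_commands(image):
    """Return the workflow steps as argument lists, in order."""
    return [
        ["docker", "build", "-t", image, "."],   # build the image from ./Dockerfile
        ["docker", "run", "--rm", image],        # smoke-test it in a throwaway container
        ["docker", "push", image],               # publish it to the registry
        ["docker", "pull", image],               # on the target host: fetch the image
        ["docker", "run", "-d", image],          # on the target host: run it detached
    ]


for argv in workflow_commands("example.com/team/app:1.0"):
    print(shlex.join(argv))
```

On a machine with Docker installed, each argument list could be passed to `subprocess.run` instead of being printed. Keeping the commands as lists rather than strings avoids shell quoting problems.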

Remember, Docker containers are lightweight and portable. Because they share the host’s kernel instead of each needing its own guest operating system under a hypervisor, they can run on almost any machine with Docker installed and make efficient use of the underlying hardware.

This Docker operational workflow forms the basis of what is called “containerization,” and it has become a popular choice for software deployment. It simplifies many challenges of software development, such as handling dependencies, environment consistency, and application isolation.

We hope this article helps you understand the Docker architecture, its components, and its operational workflow. We will end this post here and cover more about Docker in upcoming articles. Please keep visiting thesecmaster.com for more technical information like this. Visit our social media pages on Facebook, Instagram, LinkedIn, Twitter, Telegram, Tumblr, and Medium, and subscribe to receive updates like this.

Arun KL

Arun KL is a cybersecurity professional with 15+ years of experience in IT infrastructure, cloud security, vulnerability management, Penetration Testing, security operations, and incident response. He is adept at designing and implementing robust security solutions to safeguard systems and data. Arun holds multiple industry certifications including CCNA, CCNA Security, RHCE, CEH, and AWS Security.