
Configuring Splunk Universal Forwarder and Indexer to Monitor Log Files

Welcome back, fellow Splunk enthusiasts! In our previous articles, we covered the essential steps of creating indexes, configuring receivers and forwarders on a Splunk Indexer instance, and deploying the Universal Forwarder on Linux and Mac machines. Now, it's time to bring all this information together and learn how to configure the Universal Forwarder and Indexer to send logs from a log source.

Splunk Universal Forwarder supports various data input streams, including files and folders, TCP/UDP, Syslog, HTTP Event Collection, REST APIs, scripts, and more. In this article, we will focus on the configuration required to read logs from files or folders.

By the end of this article, you will have a solid understanding of how to set up the Universal Forwarder and Indexer to effectively monitor log files, enabling you to unlock valuable insights from your data.

Lab Setup

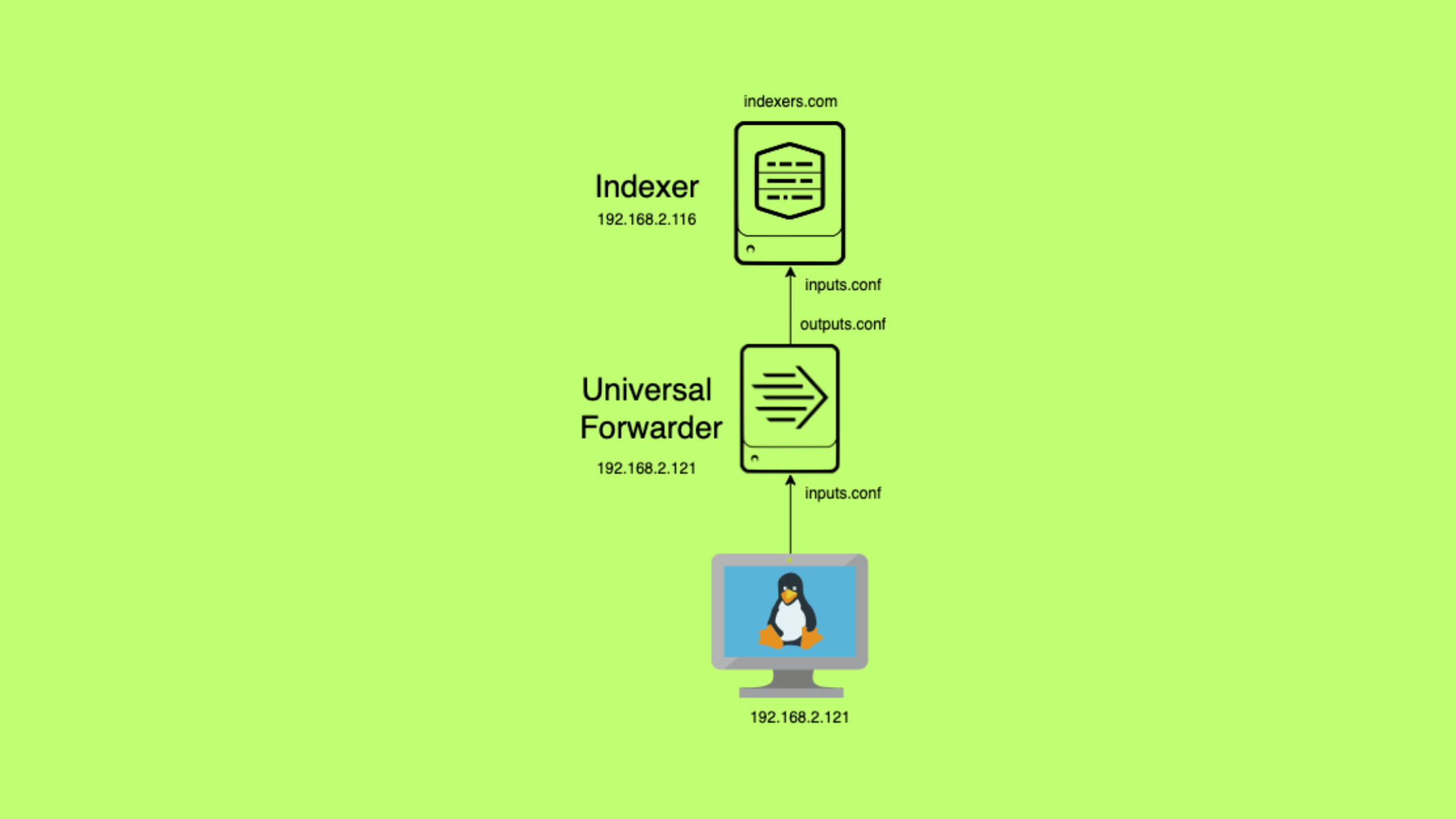

For this tutorial, we have a simple lab setup consisting of two machines: a Mac and a Linux machine. The Splunk Indexer instance is deployed on the Mac, which will act as the Indexer, while the Universal Forwarder is installed on the Linux machine, which serves as our log source.

As depicted in the diagram above, the Linux machine generates logs that we want to monitor and analyze using Splunk. The Universal Forwarder installed on the Linux machine will be responsible for collecting these logs and forwarding them to the Splunk Indexer running on the Mac.

Throughout this article, we will guide you through the process of configuring the Universal Forwarder on the Linux machine to read logs from specific files or folders and send them to the Splunk Indexer on the Mac. By following the steps outlined in this tutorial, you will gain hands-on experience in setting up a basic Splunk architecture for log monitoring and analysis.

Configuration Overview

Before we dive into the hands-on configuration of the Universal Forwarder and Indexer instances, let's take a moment to understand the key configuration files involved in the process. This overview will give you a clear picture of what we'll be setting up on each component.

Universal Forwarder Configuration

On the Universal Forwarder (UF) instance, we will configure two essential files:

1. inputs.conf: This file is used to specify the log sources that the Universal Forwarder should monitor and collect logs from. In our lab setup, we will configure the UF to monitor the Apache access logs stored in the /var/log/apache directory on the Linux machine.

2. outputs.conf: This file is used to define where the collected logs should be forwarded to. We will configure the UF to send the logs to the Splunk Indexer running on the Mac machine. The outputs.conf file will contain the IP address and port number of the Indexer to establish the connection.

Indexer Configuration

On the Splunk Indexer instance, which is the recipient of the logs forwarded by the Universal Forwarder, we will configure two key files:

1. inputs.conf: This file is used to configure the Indexer to listen for incoming data from the Universal Forwarder. We will specify the port number (9997) on which the Indexer should listen for the forwarded logs.

2. indexes.conf: This file is used to define the index where the received logs will be stored and indexed. In our lab setup, we will create an index named "security" to store the Apache access logs forwarded by the Universal Forwarder.

It's important to note that all of these configurations will be done within the context of an app named "apache_logs". Creating a dedicated app for our Apache log monitoring setup allows us to keep the configurations organized and separate from other Splunk configurations. The "apache_logs" app will be created on both the Universal Forwarder and Indexer instances, and the respective configuration files (inputs.conf, outputs.conf, and indexes.conf) will be placed within the app directory.

By configuring these files on the Universal Forwarder and Indexer instances, we establish a pipeline for collecting logs from the Linux machine, forwarding them to the Indexer, and storing them in a dedicated index for further analysis.

With this configuration overview in mind, let's proceed to the hands-on steps of setting up the Universal Forwarder and Indexer in the next sections.


Configuring the Universal Forwarder for Log Monitoring and Forwarding

In this section, we will guide you through the process of configuring the Universal Forwarder on the Linux machine to monitor the Apache logs stored in the /var/log/apache directory and forward them to the Splunk Indexer running on the Mac. We will create an app named "apache_logs" and set up the inputs.conf and outputs.conf files to achieve this.

Note: You could make all of these configurations in the system/local directory. However, it is good practice to test configurations inside a dedicated app, which keeps the setup separate and clean. We use a test app named 'apache_logs' throughout this demo.

Step 1: Create the "apache_logs" App Directory

SSH into the Linux machine where the Universal Forwarder is installed.

Navigate to the Splunk Universal Forwarder's apps directory:

cd /opt/splunkforwarder/etc/apps

Create a new directory named "apache_logs":

sudo mkdir apache_logs

Change the ownership of the "apache_logs" directory to the "splunk" user:

sudo chown -R splunk:splunk apache_logs

We have published dedicated articles on managing Apps and Add-ons. We recommend referring to them for more detail.
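If you prefer fewer commands, you can collapse the directory steps above and the "local" directory created in the next step into one idempotent Bash function. This is a minimal sketch and not part of the procedure in this article. The function name create_app_skeleton is hypothetical, the apps directory defaults to the forwarder path used here, and ownership is changed only if a "splunk" account exists on the machine.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create <apps_dir>/<app>/local in one idempotent step.
# Assumptions: apps_dir defaults to the UF path used in this article, and the
# "splunk" account may or may not exist (chown is skipped if it does not).
create_app_skeleton() {
  local apps_dir="${1:-/opt/splunkforwarder/etc/apps}"
  local app="${2:-apache_logs}"
  # mkdir -p creates the app and its local/ subdirectory, and succeeds
  # silently if they already exist
  mkdir -p "${apps_dir}/${app}/local" || return 1
  # Give the whole app tree to the splunk user, but only if that user exists
  if id splunk >/dev/null 2>&1; then
    chown -R splunk:splunk "${apps_dir}/${app}"
  fi
}
```

Run it as root, because a regular user usually cannot write under /opt/splunkforwarder. Running the function a second time is harmless.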

Step 2: Configure the Inputs.conf File

Navigate to the "apache_logs" app directory:

cd apache_logs

Create a new directory named "local":

sudo mkdir local

Change to the "local" directory:

cd local

Create a new file named inputs.conf:

sudo nano inputs.conf

Add the following configuration to monitor the Apache log directory:

[monitor:///var/log/apache]

disabled = 0

index = security

sourcetype = apache_access

host_segment = 3

This configuration tells the Universal Forwarder to monitor the /var/log/apache directory, assign the events to the "security" index, set the source type to "apache_access", and use the third segment of the file path as the host value.

Save the changes and exit the text editor.
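host_segment counts the "/"-separated segments of each monitored file's full path, starting at 1. The tiny helper below mimics that counting to make the effect of host_segment = 3 concrete. It is an illustration, not Splunk's own code, and the file name access.log is hypothetical. For any file directly under /var/log/apache, the third segment is "apache", so events would get host=apache rather than the Linux machine's hostname. host_segment is most useful when the path itself contains a host name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: return the Nth "/"-separated segment of a path,
# counting from 1 and ignoring the leading "/", as host_segment does.
host_from_segment() {
  local path="$1" n="$2"
  # Drop the leading slash, then let awk split on "/" and print field n
  printf '%s\n' "${path#/}" | awk -F/ -v n="$n" '{ print $n }'
}

host_from_segment /var/log/apache/access.log 3   # prints: apache
```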

We have published a dedicated article on configuring the inputs.conf file. We recommend referring to it for more detail.
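As an alternative to editing the file interactively in nano, you can write the same inputs.conf from a script. This is a minimal sketch. The function name write_uf_inputs is hypothetical, and it assumes the target directory is the app's local/ directory created in the steps above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the forwarder's inputs.conf non-interactively.
# Assumption: local_dir is the apache_logs app's local/ directory.
write_uf_inputs() {
  local local_dir="${1:-/opt/splunkforwarder/etc/apps/apache_logs/local}"
  # The quoted 'EOF' keeps the heredoc literal (no shell expansion inside)
  cat > "${local_dir}/inputs.conf" <<'EOF'
[monitor:///var/log/apache]
disabled = 0
index = security
sourcetype = apache_access
host_segment = 3
EOF
}
```

To see how Splunk merges this file with the other configuration layers, and which file each setting comes from, run `/opt/splunkforwarder/bin/splunk btool inputs list --debug`.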

Step 3: Configure the outputs.conf File

In the same "local" directory, create a new file named outputs.conf:

sudo nano outputs.conf

Add the following configuration to forward the logs to the Splunk Indexer:

[tcpout]

defaultGroup = indexers

[tcpout:indexers]

server = 192.168.2.116:9997

Replace 192.168.2.116 with the IP address of your Splunk Indexer running on the Mac.

Save the changes and exit the text editor.

The [tcpout] stanza defines the default settings for forwarding data over TCP. The defaultGroup setting specifies the name of the group of indexers to which the logs will be forwarded. In this case, the group is named "indexers".

The [tcpout:indexers] stanza defines the settings for the "indexers" group. The server setting specifies the IP address and port of the Splunk Indexer to which the logs will be forwarded. Port 9997 is the port conventionally used for receiving data from forwarders, and it must match the receiving port configured on the Indexer.
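A typo in the server line is an easy way to break forwarding silently. This minimal sketch writes the same outputs.conf after a basic check that the address has the form host:port. The function name write_uf_outputs is hypothetical, and the default address is this article's lab Indexer, so pass your own as the second argument.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write outputs.conf for a single indexer after checking
# that the address looks like host:port (a numeric port is required).
write_uf_outputs() {
  local local_dir="${1:-/opt/splunkforwarder/etc/apps/apache_logs/local}"
  local server="${2:-192.168.2.116:9997}"
  local re='^[^:[:space:]]+:[0-9]+$'
  # Reject values such as "192.168.2.116" (no port) or "host:abc"
  if [[ ! $server =~ $re ]]; then
    echo "invalid server address: $server" >&2
    return 1
  fi
  cat > "${local_dir}/outputs.conf" <<EOF
[tcpout]
defaultGroup = indexers

[tcpout:indexers]
server = ${server}
EOF
}
```

After the forwarder restarts, `/opt/splunkforwarder/bin/splunk list forward-server` shows whether the forwarder lists the Indexer as an active or an inactive destination.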

Step 4: Restart the Splunk Universal Forwarder

Restart the Splunk Universal Forwarder to apply the new configuration:

sudo /opt/splunkforwarder/bin/splunk restart

Wait for the restart to complete.

With these steps, you have successfully configured the Universal Forwarder on the Linux machine to monitor the Apache logs stored in the /var/log/apache directory and forward them to the Splunk Indexer running on the Mac.

Note: Ideally, the Universal Forwarder should not start sending data until the Indexer is configured to receive and store the logs. We will restart the UF services again once the Indexer is set up in the next section.

Configuring the Indexer for Log Receiving

Now that we have the Universal Forwarder set up and ready to send logs, it's time to configure the Splunk Indexer to receive and store these logs. In this section, we will guide you through the process of setting up the indexes.conf and inputs.conf files under an app named "apache_logs" on the Indexer.

Step 1: Create the "apache_logs" App

Let's start configuring the Indexer by creating an app named "apache_logs", as we did on the Universal Forwarder. We have a dedicated tutorial on managing Apps and Add-ons that shows how to create them from both the Web UI and the CLI. You can use either method to create the app.

Log in to the Splunk Indexer's web interface.

Navigate to the "Apps" section and click on "Create app".

Name the app "apache_logs" and provide a description (optional).

Click "Save" to create the app.

We have published dedicated articles on managing Apps and Add-ons. We recommend referring to them for more detail.

Step 2: Configure the indexes.conf File

SSH into the Splunk Indexer machine.

Navigate to the "apache_logs" app directory:

On Linux:

cd /opt/splunk/etc/apps/apache_logs/local

On Mac:

cd /Applications/splunk/etc/apps/apache_logs/local

Create or edit the indexes.conf file using a text editor (e.g., nano):

sudo nano indexes.conf

Add the following stanza to define the "security" index:

[security]

homePath = $SPLUNK_DB/security/db

coldPath = $SPLUNK_DB/security/colddb

thawedPath = $SPLUNK_DB/security/thaweddb

Save the changes and exit the text editor.

Verify that the index appears in the Web UI (Settings > Indexes).

Note: If you don't see the index in the Web UI, reload the configuration by visiting this URL: "https://splunk_server:8000/debug/refresh". We covered creating indexes in detail in a separate article. We recommend referring to it for more details.
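All three paths in the stanza above are derived from the index name, so the stanza is easy to generate. This is a minimal sketch with a hypothetical function name (add_index_stanza). It appends a stanza only if one with the same name is not already present. $SPLUNK_DB is written literally, and Splunk expands it when it reads the file. On this lab Indexer, the target would be apache_logs/local/indexes.conf under /opt/splunk (Linux) or /Applications/splunk (Mac).

```shell
#!/usr/bin/env bash
# Hypothetical helper: append an index stanza whose homePath, coldPath and
# thawedPath all follow the $SPLUNK_DB/<index>/... pattern used above.
add_index_stanza() {
  local conf_file="$1" index="${2:-security}"
  # Stay idempotent: do nothing if the stanza header already exists
  if [ -f "$conf_file" ] && grep -qxF "[${index}]" "$conf_file"; then
    return 0
  fi
  {
    # Separate the new stanza from existing content with a blank line
    if [ -s "$conf_file" ]; then printf '\n'; fi
    # Single-quoted formats keep $SPLUNK_DB literal for Splunk to expand
    printf '[%s]\n' "$index"
    printf 'homePath = $SPLUNK_DB/%s/db\n' "$index"
    printf 'coldPath = $SPLUNK_DB/%s/colddb\n' "$index"
    printf 'thawedPath = $SPLUNK_DB/%s/thaweddb\n' "$index"
  } >> "$conf_file"
}
```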

Step 3: Configure the inputs.conf File

In the same "apache_logs" app directory, create or edit the inputs.conf file:

sudo nano inputs.conf

Add the following stanza to configure the Indexer to listen for incoming traffic from the Universal Forwarder on port 9997:

[splunktcp://9997]

disabled = 0

Save the changes and exit the text editor.

You should now see a receiver configured in the Web UI (Settings > Forwarding and receiving). If not, reload the configuration by visiting this URL: "https://splunk_server:8000/debug/refresh".

We have published a dedicated article on configuring the indexes.conf file. We recommend referring to it for more detail.
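The port in the forwarder's server setting and the port in this splunktcp stanza must agree. As a quick cross-check, this hypothetical helper (receiver_ports) lists the ports declared in [splunktcp://...] stanzas of an inputs.conf file. It also accepts the [splunktcp://:9997] form with a leading colon. Compare its output with the port after the colon in the forwarder's "server =" line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the port of every [splunktcp://<port>] or
# [splunktcp://<host>:<port>] stanza header found in an inputs.conf file.
receiver_ports() {
  # Group 1 is an optional "<host>:" prefix; group 2 is the port number
  sed -nE 's#^\[splunktcp://([^]:]*:)?([0-9]+)\][[:space:]]*$#\2#p' "$1"
}
```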

Step 4: Restart the Splunk Indexer

Restart the Splunk Indexer to apply the new configuration:

On Linux:

sudo /opt/splunk/bin/splunk restart

On Mac:

/Applications/splunk/bin/splunk restart

Wait for the restart to complete.
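Because the Indexer's install path differs between Linux and Mac in this lab, a small helper can pick the path from `uname -s`. This is a hypothetical convenience function, and it assumes the install locations used in this article (/opt/splunk on Linux, /Applications/splunk on Mac).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the Indexer's SPLUNK_HOME for this OS, using
# the install paths from this article. An OS name can be passed for testing.
indexer_splunk_home() {
  case "${1:-$(uname -s)}" in
    Darwin) echo /Applications/splunk ;;
    *)      echo /opt/splunk ;;
  esac
}
```

You could then restart with `"$(indexer_splunk_home)/bin/splunk" restart`, prefixed with sudo on Linux.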

That's it. If all the configurations are correct and there are no network connectivity issues between the Indexer and the Universal Forwarder, you should see the Apache logs on your Splunk Search Head.

In our case, we didn't see any logs in the Splunk Search app. It's time to troubleshoot the problem.

Troubleshoot the Logs

If you have followed the previous steps but still don't see any logs in Splunk, as happened in our case, there are a few troubleshooting steps you can take to identify and resolve the issue. Let's go through them one by one.

Step 1: Verify Indexer Listening Port

The first step in troubleshooting is to ensure that the Splunk Indexer is properly listening on the designated port (9997 in our case). To check this, run the following command on the Indexer machine.

lsof -PiTCP -sTCP:LISTEN

This command lists all processes listening on TCP ports. Run it with sudo if the Splunk process belongs to another user. Look for an entry with port 9997 to confirm that the Indexer is listening on the correct port.
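With -P, lsof prints numeric ports in its NAME column, for example `*:9997 (LISTEN)`. To turn "look for an entry" into a yes/no check, you can pipe the listing into a small filter. This is a sketch with a hypothetical function name (has_listener). Alternatively, `lsof -nP -iTCP:9997 -sTCP:LISTEN` restricts the listing to that port directly.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the lsof listing read from stdin contains
# a TCP listener on the given port, e.g. a line ending in "*:9997 (LISTEN)".
has_listener() {
  local port="$1"
  # Match ":<port> (LISTEN)" exactly, so 9997 does not match 19997 or 99970
  grep -Eq ":${port}[[:space:]]+\(LISTEN\)"
}
```

Usage on the Indexer: `lsof -PiTCP -sTCP:LISTEN | has_listener 9997 && echo "port 9997 is listening"`.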

Step 2: Check if the Universal Forwarder can Connect to the Indexer on port 9997

From the Universal Forwarder machine, use the telnet command to test the connection to the Indexer:

telnet <indexer_ip> 9997

If the connection fails, troubleshoot your network: check whether a firewall or another network device is blocking the traffic.
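telnet is often missing from minimal Linux installs. Bash can open a TCP connection itself through its /dev/tcp redirection, which gives the same reachability test. This is a minimal sketch. The function name tcp_reachable is hypothetical, and it assumes the GNU coreutils `timeout` command is available, as it is on most Linux systems.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a TCP connection to host:port can be
# opened within the given number of seconds (default 5).
tcp_reachable() {
  local host="$1" port="$2" secs="${3:-5}"
  # The inner bash opens a socket on fd 3 and exits; a refused or failed
  # connection makes it exit non-zero, and timeout caps how long it waits
  timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}
```

Usage on the forwarder: `tcp_reachable 192.168.2.116 9997 && echo reachable || echo "not reachable"`.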

Step 3: Clear the Fishbucket

If your network connection is good, the next troubleshooting step is to clear the Fishbucket. The Fishbucket holds checkpoint information for every file monitored for data input, which is how the forwarder remembers how much of each file it has already read. The Fishbucket files are stored in the '/opt/splunkforwarder/var/lib/splunk/' directory:

cd /opt/splunkforwarder/var/lib/splunk/

Before clearing the Fishbucket, stop the Universal Forwarder service and confirm that it has stopped:

sudo /opt/splunkforwarder/bin/splunk stop

sudo /opt/splunkforwarder/bin/splunk status

Run this command to clear the _thefishbucket index:

sudo /opt/splunkforwarder/bin/splunk clean eventdata -index _thefishbucket

If the above command doesn't clear the Fishbucket, manually delete the fishbucket directory and everything underneath it:

sudo rm -R /opt/splunkforwarder/var/lib/splunk/fishbucket/

Start the Universal Forwarder service again. The UF recreates the Fishbucket and reads the log files again from the beginning.

sudo /opt/splunkforwarder/bin/splunk start

sudo /opt/splunkforwarder/bin/splunk status

The Universal Forwarder recreated the Fishbucket directory and read the log files once again.
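The stop, clean, fallback and start sequence above can be wrapped in one function so that no step is forgotten. This is a sketch, not an official Splunk procedure. The function name reset_fishbucket is hypothetical, SPLUNK_HOME defaults to the forwarder path used in this article, and it passes `-f` to `clean` so the command does not stop at its confirmation prompt. As the steps above note, every monitored file is read again from the beginning afterwards. If you only need to re-read a single file, Splunk's btprobe utility can reset one file's checkpoint; check the documentation for your version.

```shell
#!/usr/bin/env bash
# Hypothetical helper: stop the UF, clear the Fishbucket, and start it again.
# Run as root. Afterwards, all monitored files are re-read from the start.
reset_fishbucket() {
  local splunk_home="${1:-/opt/splunkforwarder}"
  local splunk="${splunk_home}/bin/splunk"
  local fishbucket="${splunk_home}/var/lib/splunk/fishbucket"

  "$splunk" stop || return 1
  # Preferred route: let Splunk clean the _thefishbucket index itself
  # (-f skips the interactive confirmation prompt)
  if ! "$splunk" clean eventdata -index _thefishbucket -f; then
    # Fallback from the steps above: remove the directory by hand
    rm -rf -- "$fishbucket"
  fi
  "$splunk" start
}
```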

Wait a few seconds after starting the Universal Forwarder, then search for logs on the Splunk Search Head. If the troubleshooting steps were successful, you should now see logs appearing in the search results, as they did in our case.

These steps should help you identify and resolve issues that prevent logs from appearing in Splunk. Remember to check the Indexer port, test the connection, clear the Fishbucket, and restart the Universal Forwarder to ensure that logs are properly forwarded and indexed.

We hope this article helps you understand how to set up the Universal Forwarder and Indexer to effectively monitor log files.

That's all for now. We will cover more about Splunk in upcoming articles. Please keep visiting thesecmaster.com for more such technical information. Visit our social media pages on Facebook, Instagram, LinkedIn, Twitter, Telegram, Tumblr, & Medium and subscribe to receive information like this.


Arun KL

Arun KL is a cybersecurity professional with 15+ years of experience in IT infrastructure, cloud security, vulnerability management, Penetration Testing, security operations, and incident response. He is adept at designing and implementing robust security solutions to safeguard systems and data. Arun holds multiple industry certifications including CCNA, CCNA Security, RHCE, CEH, and AWS Security.