
How to Turn Your Raspberry Pi into an AI ChatBot Server?

Have you ever wondered if you could run a ChatGPT-like AI model locally on a small single-board computer? Well, wonder no more: with the power of alpaca.cpp and the new Raspberry Pi 5, you absolutely can!

This post is a bit different. We are going to show you how to run a ChatGPT-like model locally on a tiny computer like the Raspberry Pi and, eventually, turn your Raspberry Pi into an AI chatbot server. If you don't have a Raspberry Pi, don't worry: you can try this on any Windows, Linux, or Mac computer. You don't need to stick to the Raspberry Pi. What makes this post unique is that the project lets you run a large language model on a small computer like the Raspberry Pi.

LLMs demand a huge amount of computational resources. Even a comparatively small 7B-parameter model needs about 14 GB of memory just to hold its weights at 16-bit precision, far more than a Raspberry Pi has. So how can you run a ChatGPT-like model locally on a tiny computer like the Raspberry Pi? The answer lies in the Alpaca project and alpaca.cpp, which runs a 4-bit quantized version of the model. Scroll down to see the details in this blog post.

Introduction to Alpaca – An Instruction-Following LLaMA Model

Alpaca is an open-source project from researchers at Stanford University that aims to build an AI assistant that can understand instructions and engage in helpful dialog. It fine-tunes Meta AI's LLaMA foundation model (the 7B version) to follow natural-language instructions closely, similar to the capabilities seen in ChatGPT; the resulting model is called Stanford Alpaca.

The Alpaca project was led by graduate students Rohan Taori, Ishaan Gulrajani, Tianyi Zhang, Yann Dubois and Xuechen Li, advised by Carlos Guestrin, Percy Liang and Tatsunori Hashimoto.

The key insight behind this work is that while large language models like LLaMA have impressive fluency and semantic understanding, they do not reliably follow instructions unless they are explicitly trained to do so. By taking Meta's efficient LLaMA architecture as the base and fine-tuning it on instruction-following demonstrations, the resulting “Alpaca” model retains broad knowledge while aligning its behavior much more closely with what users ask for.

To train Alpaca, just over 52K instruction-following demonstrations were generated automatically with OpenAI's text-davinci-003 model. This affordable data-collection process made capable instruction following possible without expensive human labeling.

Stanford reports that the trained Alpaca model behaves qualitatively similarly to OpenAI's text-davinci-003, and once quantized, it can run fully offline on regular consumer hardware. Integrations such as alpaca.cpp make this technology widely accessible.

How Does Alpaca Work?

At a high level, Alpaca builds on the knowledge and language capabilities already present in the LLaMA foundation model developed by Meta AI. LLaMA is pretrained on a large, diverse corpus, which lets it understand semantics, reason about concepts, and generate fluent text.

However, LLaMA by itself does not reliably follow instructions or align its outputs with user needs. This is where the additional “Alpaca” tuning comes into play. Alpaca fine-tuning uses supervised learning on instruction-response pairs to specialize LLaMA's behavior for following textual instructions. Mathematically, this adjusts the model's weights to raise the probability of responses that satisfy the given instructions.

Image Source: Stanford Alpaca

Data Generation

The key innovation that allowed affordable, scalable data collection was using OpenAI's text-davinci-003 model to generate the training examples. Specifically, the Alpaca researchers followed the self-instruct approach: starting from 175 human-written seed tasks, they prompted text-davinci-003 to produce 52,000 unique instructions, each paired with optional context (an input) and a response.

This turned a costly manual labeling effort into a fast, automated one by strategically using text-davinci-003's existing capabilities. The prompts are carefully designed to elicit a wide variety of instructions spanning different task types and levels of complexity.

This ultimately yielded a high-quality supervised training corpus for less than $500, orders of magnitude cheaper than typical human-annotation approaches, while retaining diversity.

Fine-Tuning

With the generated dataset in hand, the next step was specializing the base LLaMA model to respond correctly to such instructions. This was done with supervised fine-tuning rather than Reinforcement Learning from Human Feedback (RLHF): the model is trained on the 52K instruction-response pairs so that it learns to produce the demonstrated response for each instruction.
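Each example in the generated dataset is wrapped in a fixed prompt template before training. The function below is a minimal sketch that reproduces the wording of that template as published in the stanford_alpaca repository, with one variant for instructions that carry an extra input and one for those that don't. The name alpaca_prompt is a hypothetical helper, and alpaca.cpp's chat program may apply its own, slightly different wrapping.

```shell
#!/bin/sh
# Sketch of the Stanford Alpaca prompt template (wording from the
# stanford_alpaca repository). alpaca_prompt is a hypothetical helper name.
#
# Usage: alpaca_prompt "instruction" ["optional input"]
alpaca_prompt() {
  local instruction="$1"
  local input="${2:-}"
  if [ -n "$input" ]; then
    # Variant used when the example has an input field.
    printf '%s\n\n### Instruction:\n%s\n\n### Input:\n%s\n\n### Response:' \
      "Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request." \
      "$instruction" "$input"
  else
    # Variant used when the input field is empty.
    printf '%s\n\n### Instruction:\n%s\n\n### Response:' \
      "Below is an instruction that describes a task. Write a response that appropriately completes the request." \
      "$instruction"
  fi
}
```

During fine-tuning, the model learns to continue this text after "### Response:" with the demonstrated answer.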

Mathematically, training minimizes the standard next-token cross-entropy loss on the response tokens, which adjusts LLaMA's parameters to raise the probability of the demonstrated response given the instruction and any accompanying input.
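For reference, Stanford's released training code uses ordinary supervised fine-tuning, so the objective is the standard next-token cross-entropy. This sketch assumes, as in that script, that prompt tokens are masked so only response tokens are scored. The symbols are introduced here for illustration: D is the set of 52K demonstrations, x is the templated instruction (plus any input), y is the target response, and θ the model parameters.

```latex
\mathcal{L}(\theta) = -\sum_{(x,\,y)\in\mathcal{D}} \; \sum_{t=1}^{|y|} \log p_\theta\!\left(y_t \mid x,\; y_{<t}\right)
```

In practice the loss is averaged over tokens and mini-batches rather than summed over the whole dataset, but the quantity being minimized is the same.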

The researchers fine-tuned the 7B LLaMA model for 3 epochs, which took about 3 hours on eight 80GB A100 GPUs and cost less than $100 on most cloud compute providers.

Performance was evaluated with a blind pairwise comparison against text-davinci-003 on the self-instruct evaluation set, where Alpaca won 90 comparisons versus 89. Combined with the small memory footprint of the 7B model once it is quantized to 4 bits, this made it possible to port Alpaca to everyday devices via integrations like alpaca.cpp.
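A quick back-of-the-envelope calculation shows why quantization is what makes a 7B model fit on small hardware. The sketch below multiplies the parameter count by the bits stored per weight. weights_mb is a hypothetical helper, and it deliberately ignores quantization scales, the context cache and runtime buffers, so real memory use is somewhat higher than its estimate.

```shell
#!/bin/sh
# Rough estimate of the memory needed just to hold a model's weights.
# Assumes size = parameters x bits-per-weight / 8 and ignores quantization
# scales, the context cache and runtime buffers (real usage is higher).
#
# Usage: weights_mb PARAMS_IN_MILLIONS BITS_PER_WEIGHT
weights_mb() {
  local params_millions="$1"
  local bits="$2"
  # millions of parameters x bits / 8 bits-per-byte = megabytes (10^6 bytes)
  echo $(( ${params_millions} * ${bits} / 8 ))
}
```

For a 7B model, weights_mb 7000 16 prints 14000 (about 14 GB at 16-bit precision), while weights_mb 7000 4 prints 3500, which is why the 4-bit model is within reach of an 8GB Pi.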

In summary, data generation cleverly leveraged text-davinci-003's capabilities, while supervised fine-tuning specialized the LLaMA foundation model to follow instructions, resulting in the Alpaca assistant.

What Makes The Alpaca Project Special?

The Alpaca project pushes boundaries on multiple fronts to bring more advanced AI capabilities into the hands of ordinary users:

Hybrid Model Integration: Alpaca combines Meta's LLaMA architecture, which provides broad knowledge and fluency, with additional supervised instruction tuning so that it follows instructions precisely and helpfully. This fusion reflects a thoughtful and sophisticated approach.

Local Execution: Unlike many models that are only accessible through cloud APIs, Alpaca can power a chatbot that runs entirely offline on regular devices via integrations like alpaca.cpp, which greatly improves privacy and availability.

Platform Versatility: The alpaca.cpp documentation includes instructions for downloading and running Alpaca on Windows, macOS (Intel and ARM) and Linux. This broad compatibility makes it accessible on common user machines.

Open Source Community: alpaca.cpp credits the building blocks that made it possible, including LLaMA, the Stanford Alpaca fine-tune, and the llama.cpp runtime. The project maintains close ties with these open-source efforts rather than isolating itself.

Upstream Contributions: The changes made in alpaca.cpp have since been upstreamed into llama.cpp, so the wider ecosystem built on that runtime benefits from them. This highlights the project's role in pushing the ecosystem forward.

The combination of strategic model composition, a focus on availability through local execution, platform flexibility, a collaborative ethos and upstream contributions makes Alpaca a promising project for extending the reach of state-of-the-art AI. The team's continued research may bring further advances building on this initial success.

How to Install Alpaca on Raspberry Pi and Turn Your Raspberry Pi into an AI ChatBot server?

In this step-by-step section, we will guide you through installing and running Alpaca, an instruction-following language model similar to ChatGPT, on the latest Raspberry Pi. By the end, your Pi will be transformed into a private AI chatbot server you can interact with offline, without sending queries to the cloud.

Prerequisites

Before we get started with the installation, let’s go over what you’ll need:

Hardware

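A Raspberry Pi 5 board with the latest 64-bit Raspberry Pi OS (formerly Raspbian) installed. You need at least 4GB of RAM, and the 8GB model is recommended because the 4-bit 7B weight file alone is roughly 4GB.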

A microSD card with at least 32GB storage for the OS and model weights.

A power supply and micro HDMI cable for the Pi.

A heat sink for managing thermals.

Software

Git and build tools (Make, a C/C++ compiler and CMake) for building alpaca.cpp

An SSH client to connect remotely

As long as you have these basics covered, you are good to go! Feel free to use an older Pi if you have one lying around, provided it has at least 4GB of RAM and runs a 64-bit OS. The alpaca.cpp build supports ARM architectures like the Pi's, though you may need to adjust the model size to the available memory.
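Since the 4-bit 7B model is roughly 4GB, it can help to confirm both the available RAM and a 64-bit OS before building (a 32-bit process has at most 4GB of address space in total). The functions below are hypothetical helpers sketched for illustration: one reads MemTotal from /proc/meminfo, and one uses getconf LONG_BIT to check that the userland is 64-bit. The RAM threshold you pass in is your own choice, not an official requirement.

```shell
#!/bin/sh
# Pre-flight checks before installing alpaca.cpp on a Pi (hypothetical helpers).

# Print total RAM in MiB from a meminfo-style file (default: /proc/meminfo).
mem_total_mb() {
  local meminfo="${1:-/proc/meminfo}"
  awk '/^MemTotal:/ { print int($2 / 1024) }' "$meminfo"
}

# Succeed if total RAM is at least MIN_MB MiB.
# Usage: has_min_ram MIN_MB [MEMINFO_FILE]
has_min_ram() {
  local min_mb="$1"
  local meminfo="${2:-/proc/meminfo}"
  [ "$(mem_total_mb "$meminfo")" -ge "$min_mb" ]
}

# Succeed if the running userland is 64-bit. getconf LONG_BIT reports the
# userland word size; uname -m can still show aarch64 on a 32-bit OS that
# boots a 64-bit kernel. Pass a value (e.g. 32 or 64) to override.
is_64bit_userland() {
  local bits="${1:-$(getconf LONG_BIT)}"
  [ "$bits" = "64" ]
}
```

For example, has_min_ram 3500 && is_64bit_userland && echo ok. Keep in mind that MemTotal is always a little below the board's nominal RAM because some memory is reserved, so a "4GB" Pi reports less than 4096 MiB.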

With the gear ready, let’s get to the fun part – installing and interacting with Alpaca!

Step 1 – Boot Up the Raspberry Pi

Insert your flashed microSD card into the Pi, connect peripherals like the keyboard, mouse and monitor, and power it on to boot into the Raspberry Pi OS desktop.

Once the desktop has loaded, the Pi connects to the internet automatically if your WiFi credentials are already saved. Otherwise, configure your wireless network from Preferences -> WiFi Configuration.

Next, we’ll enable SSH so we can work remotely if needed. Go to Preferences -> Raspberry Pi Configuration -> Interfaces and toggle SSH to on.

Finally, click OK and let the Pi restart before moving on.

Step 2 – Install Dependencies

Now we need to install developer tools like Git and CMake which are required for building software from source code:

sudo apt update

sudo apt install git cmake build-essential libssl-dev -y

This refreshes the package index and installs the tools using apt.
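After the apt command finishes, a quick way to confirm the toolchain is on your PATH is to look up each tool with command -v. missing_tools is a hypothetical helper; by default it checks git, make, gcc and g++, all of which the apt command above installs (make, gcc and g++ come from build-essential).

```shell
#!/bin/sh
# Print each required tool that is not on PATH, one per line, and return
# non-zero if anything is missing. missing_tools is a hypothetical helper.
#
# Usage: missing_tools [TOOL...]
missing_tools() {
  # Default to the tools installed by the apt command in Step 2.
  if [ "$#" -eq 0 ]; then
    set -- git make gcc g++
  fi
  local status=0
  local tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "$tool"
      status=1
    fi
  done
  return "$status"
}
```

Running missing_tools with no arguments and getting no output means everything needed for the build is available.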

Step 3 – Download and Build Alpaca

With dependencies handled, it’s time to download Alpaca. We’ll clone the repo from GitHub using git:

git clone https://github.com/antimatter15/alpaca.cpp

This creates a local copy in an alpaca.cpp directory inside your current directory (~/alpaca.cpp if you run it from your home directory). Change into this new directory:

cd alpaca.cpp

Then build the chat binary using Make:

make chat

You should now have an executable chat program compiled specifically for your Pi's ARM architecture.

Step 4 – Download the LLM Model Weights

Although the chat tool is built, it doesn't have anything to talk with yet! We need to download compatible model weights for it to load at startup.

The 7B weight file can be found here:

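wget -O ggml-alpaca-7b-q4.bin "https://huggingface.co/Sosaka/Alpaca-native-4bit-ggml/resolve/main/ggml-alpaca-7b-q4.bin?download=true"

The -O option saves the download directly as ggml-alpaca-7b-q4.bin, the file name the chat program expects, so no renaming is needed. Run the command from inside the alpaca.cpp directory so the file sits next to the chat binary.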
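A roughly 4GB download over WiFi can end early without an obvious error, and a truncated file may fail to load. The sketch below checks that the model file exists, is not suspiciously small, and matches an expected SHA-256 hash. It assumes you obtain that hash from a source you trust, such as the model's download page, since none is given in this guide; verify_model is a hypothetical helper name.

```shell
#!/bin/sh
# Sanity-check a downloaded model file before starting the chat program.
# The expected SHA-256 must come from a source you trust; none is hard-coded.
#
# Usage: verify_model FILE EXPECTED_SHA256 [MIN_BYTES]
verify_model() {
  local file="$1" expected="$2" min_bytes="${3:-1}"
  if [ ! -f "$file" ]; then
    echo "missing: $file" >&2
    return 1
  fi
  # Arithmetic expansion strips any padding that wc may add.
  local size
  size=$(( $(wc -c < "$file") ))
  if [ "$size" -lt "$min_bytes" ]; then
    echo "too small ($size bytes), download may be truncated" >&2
    return 1
  fi
  local actual
  actual=$(sha256sum "$file" | cut -d' ' -f1)
  if [ "$actual" != "$expected" ]; then
    echo "checksum mismatch: $actual" >&2
    return 1
  fi
  return 0
}
```

For example, from inside the alpaca.cpp directory: verify_model ggml-alpaca-7b-q4.bin EXPECTED_HASH 4000000000, where the 4000000000-byte floor is an assumption based on the file being roughly 4GB.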

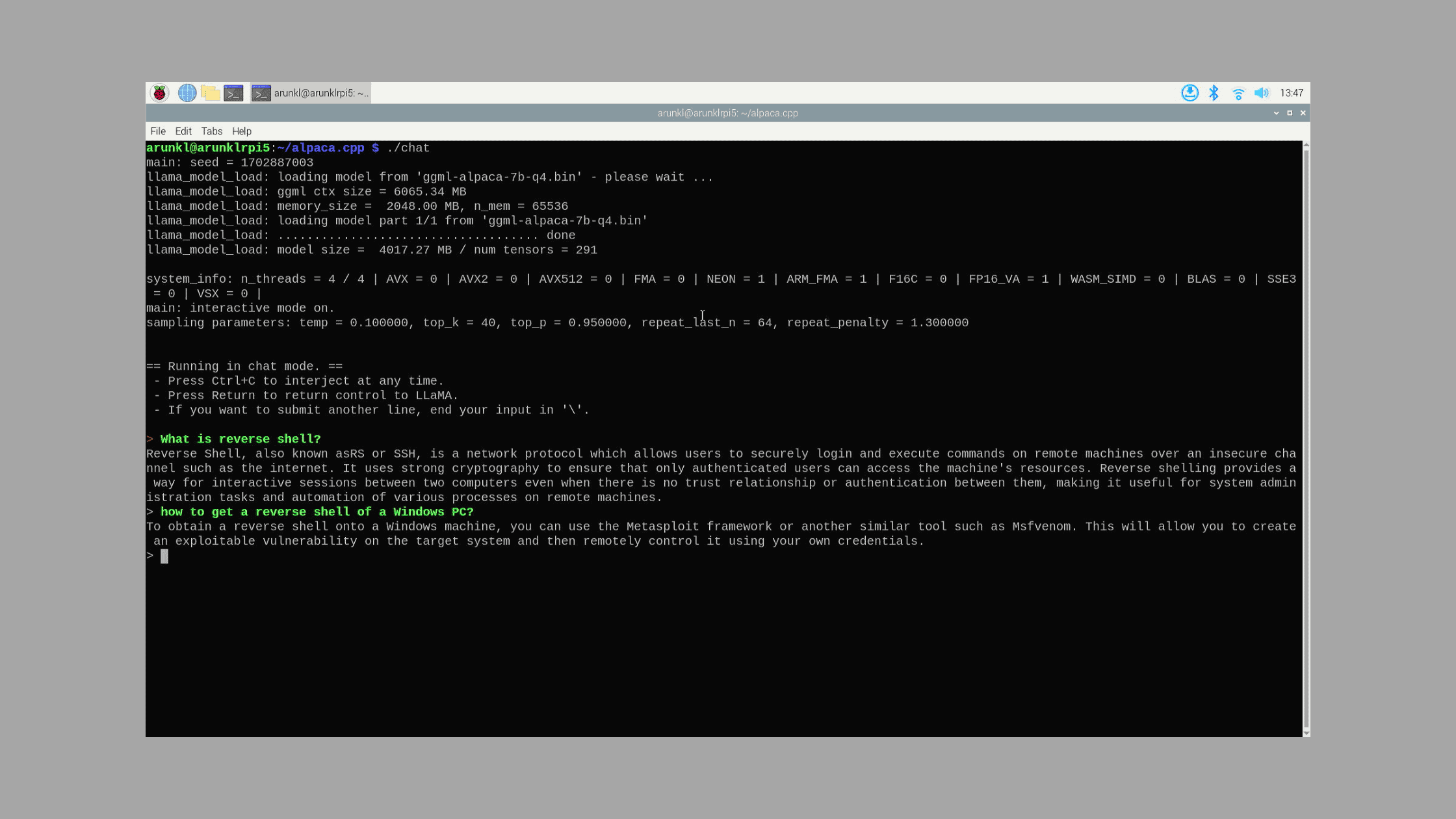

Step 5 – Start The AI Chatbot

Fire up your private Alpaca server simply by running:

./chat

You should see loading output as it initializes the model and reads its vocabulary and weights. Within 30 seconds or so, you'll be greeted with the Alpaca prompt!

Go ahead and say hello, or ask any question that comes to mind!

To exit the chat, press Ctrl + C. You can restart it again by running ./chat whenever you like without needing to repeat the full installation process.

Some example queries to try:

What is a reverse shell?

How do you get a reverse shell on a Windows PC?

Give Alpaca a test drive locally before exposing it more widely on your network. Feel free to tweak chat parameters like the number of inference threads if you want to play with performance.
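If you want to experiment with the thread count, a small wrapper keeps the command consistent. This sketch assumes the -m (model path) and -t (threads) options of alpaca.cpp's chat program; check the usage text your build prints (for example with ./chat --help) to confirm. run_chat and the DRY_RUN variable are hypothetical, and the thread count defaults to the number of CPU cores reported by nproc.

```shell
#!/bin/sh
# Launch alpaca.cpp's chat binary with an explicit model path and thread count.
# Assumes chat accepts -m (model) and -t (threads); verify on your build.
# Set DRY_RUN=1 to print the command instead of running it.
#
# Usage: run_chat [MODEL_FILE] [THREADS]
run_chat() {
  local model="${1:-ggml-alpaca-7b-q4.bin}"
  local threads="${2:-$(nproc)}"
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "./chat -m $model -t $threads"
  else
    ./chat -m "$model" -t "$threads"
  fi
}
```

On a Raspberry Pi 5, which has four CPU cores, running run_chat from the alpaca.cpp directory starts a chat with four threads; DRY_RUN=1 run_chat shows the exact command first.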

And that’s it! By completing these steps, you now have your very own AI chatbot powered by a ChatGPT-style language model running on the Raspberry Pi, controllable entirely offline for private use.

Taking It Further

While basic Alpaca works smoothly on a Pi, more advanced use cases like running huge models, processing images, or deploying chatbots on websites have higher resource requirements.

But fear not! The Raspberry Pi is part of a broader ecosystem. To go bigger while retaining the benefits of private AI, consider solutions like:

Kubernetes Cluster – Network multiple Pis together into a cluster for scale.

Google Coral – Its Edge TPU accelerator can run state-of-the-art vision networks.

NVIDIA Jetson – Next-level boards for complex edge inferencing.

Seed Servers – Turnkey appliances optimizing price/performance.

The core principles remain the same even as capabilities grow over time. We hope this guide served as a solid starting point for your private AI journey!

We hope this post helps you learn how to turn your Raspberry Pi into an AI chatbot server by running a GPT-like model locally.

Thanks for reading this tutorial post. Visit our website thesecmaster.com and social media page on Facebook, LinkedIn, Twitter, Telegram, Tumblr, Medium & Instagram, and subscribe to receive updates like this.


Arun KL

Arun KL is a cybersecurity professional with 15+ years of experience in IT infrastructure, cloud security, vulnerability management, Penetration Testing, security operations, and incident response. He is adept at designing and implementing robust security solutions to safeguard systems and data. Arun holds multiple industry certifications including CCNA, CCNA Security, RHCE, CEH, and AWS Security.