
The Workflow of Microsoft Copilot for Security

This article continues our earlier article, "What is Microsoft Copilot for Security, What Can You Do With It?". That article explored the core features and use cases of Microsoft Copilot for Security, an AI-powered virtual assistant designed for cybersecurity operations. This follow-up goes deeper into the technical side, focusing on the architecture and workflow of Microsoft Copilot for Security.

If you are new to Microsoft Copilot for Security, we recommend reading the first article to understand its purpose and basic functionality. This article covers the workflow of Microsoft Copilot for Security in detail: the technology behind it, the key terms, and the step-by-step process that lets it assist cybersecurity professionals efficiently.

Understanding Key Terminologies

Before we delve into the workflow, it's essential to understand some of the fundamental terminologies and concepts used in the context of Microsoft Copilot for Security:

Large Language Models (LLMs): Large Language Models are a type of artificial intelligence model designed to understand, generate, and interpret natural language. These models are trained on vast amounts of textual data to enable them to answer questions, write text, and even understand context-specific queries.

User Prompts: In the context of generative AI, a user prompt refers to the input or question provided by the user to the AI model. For Microsoft Copilot for Security, user prompts might include requests such as summarizing security incidents, generating scripts, or assessing vulnerabilities.

Grounding: Grounding is a critical process in AI where the system enriches the user prompt with additional contextual information. For instance, when a prompt refers to a particular vulnerability or CVE ID, grounding ensures that the AI gathers relevant threat intelligence data before proceeding with the response.

Generative AI: Generative AI refers to a class of artificial intelligence models that generate new content based on the input they receive. This includes text generation, content creation, and interactive conversation. Microsoft Copilot for Security leverages generative AI to produce insights, summaries, and automated responses based on the security data it analyzes.

These terms recur throughout the Microsoft Copilot for Security architecture and describe how the AI and security professionals interact.
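To make the idea of grounding concrete, here is a minimal sketch in Python. It is not how Copilot for Security is implemented; the `THREAT_INTEL` dictionary, the placeholder CVE entry, and the `ground_prompt` function are all hypothetical names invented for illustration. The sketch only shows the general pattern: detect an identifier in the user prompt and attach matching context before the prompt goes to a model.

```python
import re

# Hypothetical in-memory stand-in for a threat intelligence source.
# The entry below is placeholder data, not a real advisory.
THREAT_INTEL = {
    "CVE-2099-0001": "Placeholder entry: remote code execution in ExampleApp; patch available.",
}

# Matches identifiers shaped like CVE-YYYY-NNNN (four or more trailing digits).
CVE_PATTERN = re.compile(r"CVE-\d{4}-\d{4,}", re.IGNORECASE)


def ground_prompt(prompt: str) -> str:
    """Append known context for any CVE IDs mentioned in the prompt."""
    context_lines = []
    for cve_id in CVE_PATTERN.findall(prompt):
        key = cve_id.upper()
        details = THREAT_INTEL.get(key)
        if details:
            context_lines.append(f"{key}: {details}")
    if not context_lines:
        return prompt  # nothing to add; pass the prompt through unchanged
    return prompt + "\n\nContext:\n" + "\n".join(context_lines)


if __name__ == "__main__":
    print(ground_prompt("Summarize CVE-2099-0001 for my team."))
```

A prompt that mentions no known identifier is returned unchanged, which mirrors the idea that grounding adds context only when relevant data exists.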

Components of Microsoft Copilot for Security

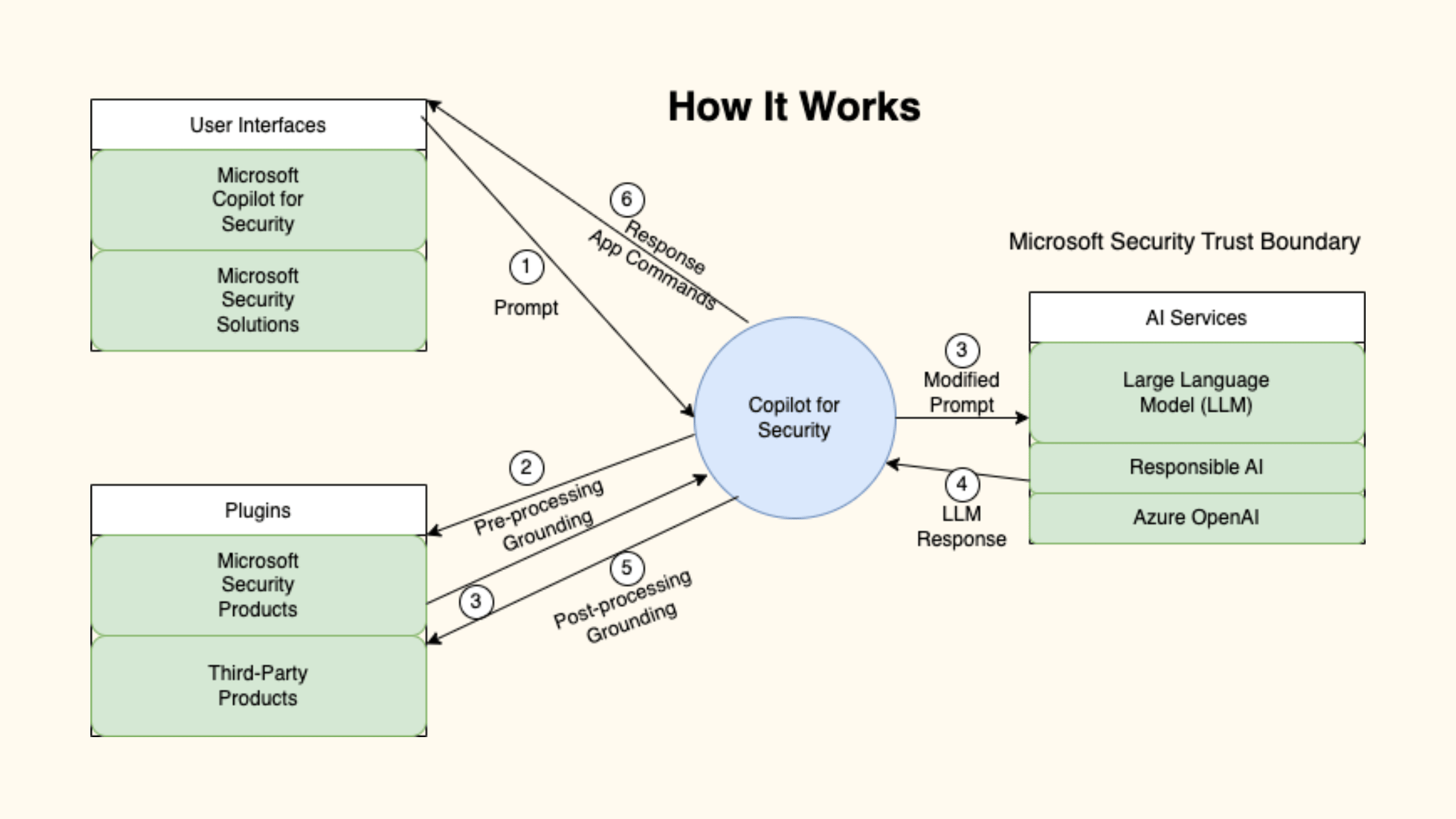

The architecture of Microsoft Copilot for Security is designed to streamline interactions between various components, ensuring efficiency, accuracy, and security. The core architecture includes the following components:

User Interface (UI): Users interact with Copilot for Security through either the dedicated portal at securitycopilot.microsoft.com or via embedded experiences within Microsoft security solutions like Microsoft Defender, Intune, Entra, and Purview. This user interface allows security professionals to submit prompts, review results, and configure settings.

Plugins: Plugins play a vital role in connecting Copilot to various Microsoft security products (e.g., Microsoft Defender, Sentinel) and third-party solutions (e.g., ServiceNow, Splunk). These plugins help Copilot pre-process prompts and post-process responses, ensuring seamless integration with existing security infrastructures.

Large Language Models (LLMs): Microsoft Copilot for Security leverages LLMs such as GPT-4 to process complex prompts, generate insights, and provide human-like responses. The LLMs analyze the input, derive patterns, and produce actionable recommendations based on the context.

Responsible AI Services: Responsible AI services are employed to check and validate AI responses, ensuring they meet ethical and compliance standards. This component is crucial for maintaining data integrity and ensuring that outputs are safe and reliable.

Azure OpenAI Service: Azure OpenAI Service acts as the underlying infrastructure that hosts and deploys the AI models. It provides the computational power and scalability needed to process large amounts of data and generate responses at machine speed.

Each of these components works in harmony to ensure that Copilot for Security delivers effective and accurate assistance in a highly secure environment. Their roles become clearer in the next section, which shows the components working together.
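As a rough illustration of the role plugins play, the sketch below models a plugin registry and a naive selection step in Python. Everything here is an assumption made for the example: the `Plugin` class, the keyword lists, and the `select_plugin` function are hypothetical, and simple keyword matching is a stand-in for whatever selection logic the real product uses.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Plugin:
    name: str
    keywords: Tuple[str, ...]  # words that suggest this plugin is relevant


# Hypothetical registry; the keyword lists are invented for this sketch.
PLUGINS = [
    Plugin("Microsoft Defender", ("incident", "alert", "device")),
    Plugin("Microsoft Sentinel", ("workspace", "hunting", "log")),
    Plugin("ServiceNow", ("ticket", "change request")),
]


def select_plugin(prompt: str) -> Optional[Plugin]:
    """Return the plugin with the most keywords present in the prompt, or None."""
    text = prompt.lower()
    best, best_score = None, 0
    for plugin in PLUGINS:
        score = sum(1 for kw in plugin.keywords if kw in text)
        if score > best_score:  # earlier plugins win ties
            best, best_score = plugin, score
    return best


if __name__ == "__main__":
    chosen = select_plugin("Show open incidents and alerts")
    print(chosen.name if chosen else "no plugin matched")
```

The point of the sketch is the shape of the design: plugins are registered with some description of what they handle, and a selection step maps each prompt to the plugin best suited to supply data for it.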

Workflow of Microsoft Copilot for Security

The workflow of Microsoft Copilot for Security can be broken down into several distinct steps:

Initiation: The process begins when a user submits a prompt to Copilot for Security through the UI. For instance, a security analyst might ask, "What are the top security incidents from the past month?"

Pre-processing: Upon receiving the prompt, Copilot for Security selects the appropriate plugin based on the query. For example, if the query involves recent security incidents, Copilot might engage the Microsoft Defender or Microsoft Sentinel plugin to fetch incident data, while a question about a threat actor or indicator would more likely go to Microsoft Defender Threat Intelligence.

Grounding: The grounding process involves refining the initial prompt to make it more context-aware. This might include referencing organizational data, threat intelligence feeds, or external databases. For example, if a vulnerability ID is mentioned in the prompt, Copilot will pull information about that specific vulnerability to enhance the response.

Large Language Model Processing: Once the prompt is grounded, it is sent to the LLM for processing. The LLM generates a response based on the grounded prompt, leveraging its training to produce a detailed, human-readable output.

Post-processing: The LLM response is then sent back to Copilot for post-processing, where plugins may be called again to format the response or add additional data. For example, if the response involves executing a script or command, Copilot ensures that it is formatted correctly and can be executed within the appropriate security context.

Responsible AI Checks: Before the response is delivered to the user, it undergoes a series of responsible AI checks to ensure it complies with organizational policies and ethical standards. This step is vital for preventing harmful or non-compliant outputs.

Response Delivery: The final response is presented to the user through the Copilot interface. If necessary, the user can request additional details, refine the prompt, or trigger automated actions based on the response.

Logging and Review: Every interaction is logged and can be reviewed later for auditing purposes. Users can see the sequence of plugins used, the grounding context applied, and the LLM's response generation process.

This workflow ensures that Microsoft Copilot for Security provides robust support for security analysts, enabling them to respond to threats quickly and accurately.
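The steps above can be sketched as a small pipeline in Python. This is a toy model under stated assumptions, not the product's implementation: `Session`, `select_plugin`, `ground`, `fake_llm`, `passes_responsible_ai`, and `run_workflow` are hypothetical names, the plugin names are invented, and the model call is replaced by a stub so the example runs without any external service.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Session:
    prompt: str
    response: str = ""
    log: List[str] = field(default_factory=list)  # audit trail of steps taken


def select_plugin(prompt: str) -> str:
    # Hypothetical rule: incident questions go to one plugin, the rest to another.
    return "incidents-plugin" if "incident" in prompt.lower() else "general-plugin"


def ground(prompt: str, plugin: str) -> str:
    # Stand-in for grounding: attach context the chosen plugin would supply.
    return f"{prompt}\n[context from {plugin}]"


def fake_llm(grounded_prompt: str) -> str:
    # Stub for the model call; a real system would call a hosted LLM here.
    return f"Draft answer based on: {grounded_prompt!r}"


def passes_responsible_ai(text: str, blocked_terms=("password",)) -> bool:
    # Toy check: reject responses that contain any blocked term.
    lowered = text.lower()
    return not any(term in lowered for term in blocked_terms)


def run_workflow(prompt: str, llm: Callable[[str], str] = fake_llm) -> Session:
    session = Session(prompt)
    plugin = select_plugin(prompt)
    session.log.append(f"pre-processing: selected {plugin}")
    grounded = ground(prompt, plugin)
    session.log.append("grounding: context attached")
    raw = llm(grounded)
    session.log.append("llm: response generated")
    response = raw.strip()
    session.log.append("post-processing: response formatted")
    if passes_responsible_ai(response):
        session.log.append("responsible-ai: passed")
    else:
        response = "Response withheld by policy check."
        session.log.append("responsible-ai: blocked")
    session.response = response
    return session


if __name__ == "__main__":
    result = run_workflow("What are the top security incidents from the past month?")
    print(result.response)
    print("\n".join(result.log))
```

Keeping the log on the session object reflects the logging and review step: each stage records what it did, so the sequence can be inspected after the response is delivered.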

Conclusion

Microsoft Copilot for Security is revolutionizing the way organizations manage cybersecurity operations. By leveraging advanced AI technologies such as LLMs, grounding, and responsible AI, Copilot helps security teams handle incidents at machine speed and scale. Its ability to integrate seamlessly with existing security products and third-party solutions makes it a versatile tool for enhancing threat detection, vulnerability management, and overall security posture. As the cybersecurity landscape continues to evolve, tools like Microsoft Copilot for Security will be essential in maintaining a strong defense against emerging threats.

We hope this article helped you understand the workflow of Microsoft Copilot for Security in detail, including the technology behind it, the key terms, and the step-by-step process that enables it to assist cybersecurity professionals efficiently.


Arun KL

Arun KL is a cybersecurity professional with 15+ years of experience in IT infrastructure, cloud security, vulnerability management, penetration testing, security operations, and incident response. He is adept at designing and implementing robust security solutions to safeguard systems and data. Arun holds multiple industry certifications including CCNA, CCNA Security, RHCE, CEH, and AWS Security.