
What is Splunk? A Beginner's Guide to the Leading Log Analysis Platform

Data is often called the new oil of the digital age. Like oil, it is a raw resource that has to be processed and refined before it yields valuable insights. Much of it is machine-generated: logs, metrics, and events from servers, applications, devices, and more. Organizations value this data because it lets them measure trends, make predictions, run analytics, troubleshoot issues, identify security threats and incidents, and gain a competitive edge. However, processing and analyzing the massive volumes of data generated by modern systems is extremely challenging without a powerful data analytics tool like Splunk.

So, what is Splunk? The short answer: Splunk is a powerful data analysis and security platform used to analyze virtually any type of machine data. Before looking at how Splunk analyzes machine data, it helps to understand what Splunk is, along with its components, architecture, uses, and capabilities. To grasp Splunk fully, it is best to start with machine data itself, so let's begin by understanding what machine data is.

What is Machine Data?

Machine data refers to the vast amounts of information generated by various devices, systems, and applications. This data is typically unstructured or semi-structured and comes from diverse sources such as servers, network devices, IoT sensors, applications, and more. Machine data contains valuable insights that can help organizations monitor their infrastructure, detect anomalies, and make data-driven decisions. Examples of machine data include system logs, web server logs, application logs, and sensor data from IoT devices.

Machine data is the raw material we ingest into a data analytics tool to answer our questions. The next question is how to process and analyze such large volumes of heterogeneous machine data.

Challenges of Processing or Analyzing Machine Data

Processing and analyzing machine data presents several significant challenges for organizations. One of the primary challenges is the sheer volume of data generated by various systems, devices, and applications. The quantity of machine data can be overwhelming, making it difficult to store, process, and analyze efficiently. Additionally, machine data comes in diverse structures and formats, ranging from structured logs to unstructured text, which adds complexity to the normalization and analysis process.

Another challenge is the velocity at which machine data is generated. With the increasing number of connected devices and the growth of IoT, data is being generated at an unprecedented pace. This requires real-time processing and analysis capabilities to derive timely insights and take proactive actions. Moreover, analyzing machine data manually is an incredibly time-consuming and resource-intensive task. It demands specialized skills, tools, and significant effort to extract meaningful insights from the raw data.

This is where a data analysis platform like Splunk comes into play. Splunk addresses these challenges with a scalable, flexible platform for collecting, indexing, and searching machine data. It can handle large volumes of data from diverse sources, automatically recognize timestamps and extract fields, and support real-time search and analysis. Its web interface and powerful search language make it easier for users to explore and visualize their data, cutting the time and effort that manual analysis would require. With Splunk, organizations can overcome the challenges of machine data and turn it into insights that drive informed decisions and improve operational efficiency. So, what exactly is Splunk?

What is Splunk?

Splunk is a powerful and versatile software platform designed to collect, index, search, and analyze machine-generated data from various sources. It enables organizations to gain valuable insights from their data, monitor their infrastructure in real-time, troubleshoot issues, and detect security incidents. Splunk can ingest data from diverse sources such as servers, applications, network devices, sensors, and IoT devices, regardless of the format or structure of the data.

One of the key strengths of Splunk is its ability to make sense of unstructured and semi-structured data. It can automatically parse and extract relevant fields from the raw data, making it searchable and analyzable. Splunk's powerful search language allows users to perform complex queries, apply filters, and create visualizations to explore and derive insights from their data. It provides a centralized platform for monitoring and analyzing data across an entire organization, enabling users to identify patterns, anomalies, and trends.
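To make the combination of field extraction and filtering concrete, here is a minimal Python sketch. It is not Splunk's implementation. It assumes hypothetical web-server events written as `key=value` pairs and turns those pairs into fields, similar in spirit to Splunk's automatic key/value extraction. It then filters and aggregates the events, which is roughly what an SPL search such as `status=500 | stats count by host` expresses.

```python
import re
from collections import Counter

# Hypothetical raw events in key=value form (sample data, not real logs).
RAW_EVENTS = [
    "2024-05-01T10:00:01 host=web01 method=GET uri=/login status=200",
    "2024-05-01T10:00:02 host=web02 method=POST uri=/login status=500",
    "2024-05-01T10:00:03 host=web01 method=GET uri=/cart status=500",
    "2024-05-01T10:00:04 host=web02 method=GET uri=/home status=500",
    "2024-05-01T10:00:05 host=web01 method=GET uri=/home status=200",
]

KV_PATTERN = re.compile(r"(\w+)=(\S+)")

def extract_fields(raw: str) -> dict:
    """Turn every key=value pair in a raw event into a field."""
    fields = dict(KV_PATTERN.findall(raw))
    fields["_raw"] = raw  # keep the original event text alongside the fields
    return fields

def search(events, **criteria):
    """Keep only events whose fields match all criteria (like status=500)."""
    return [e for e in events if all(e.get(k) == v for k, v in criteria.items())]

def stats_count_by(events, field: str) -> dict:
    """Count events per value of a field (like stats count by host)."""
    return dict(Counter(e.get(field) for e in events))

events = [extract_fields(r) for r in RAW_EVENTS]
errors = search(events, status="500")
print(stats_count_by(errors, "host"))  # {'web02': 2, 'web01': 1}
```

The point is that once fields exist, filtering and counting become simple operations on structured records rather than text scanning.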

Splunk finds extensive use in various real-world scenarios.

IT Operations: Splunk can be used to monitor and troubleshoot IT infrastructure, identify performance bottlenecks, and proactively resolve issues before they impact end-users.

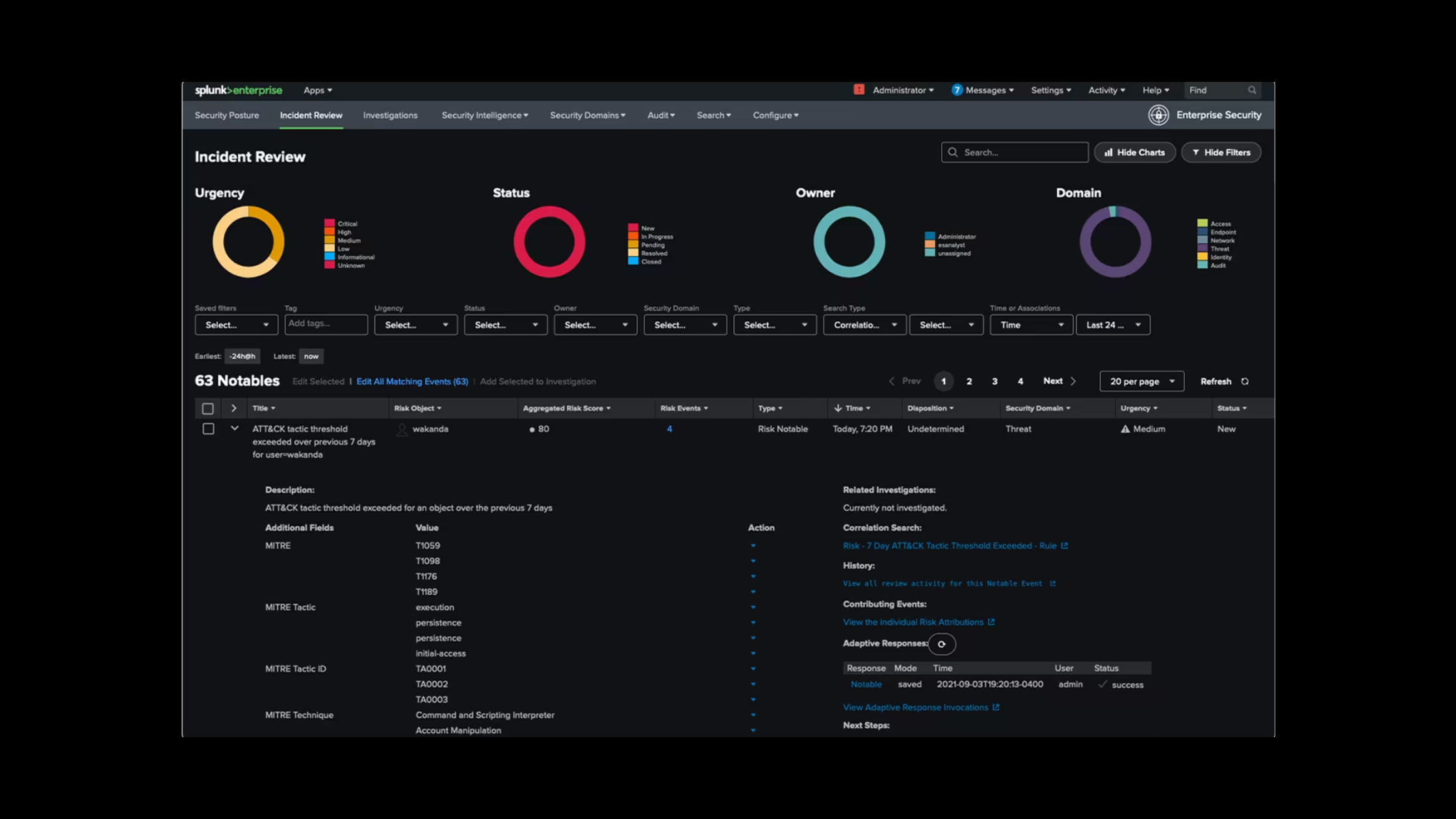

Security: Splunk can help detect and investigate security incidents, monitor user activities, and comply with various security regulations and standards.

Business Analytics: Splunk can be used to analyze customer behavior, track key performance indicators (KPIs), and gain insights into business operations to make data-driven decisions.

These are just a few examples of how Splunk is used in real-world scenarios. Its flexibility, scalability, and powerful analytics capabilities make it a valuable tool for organizations across industries, helping them unlock the value of their machine-generated data and drive business outcomes.

How Does Splunk Work?

Splunk's functionality can be divided into three key layers: data collection, data indexing, and data search.

In the data collection layer, Splunk uses lightweight agents called Forwarders to gather machine data from various sources. These Forwarders are installed on the devices or systems generating the data and forward it to Splunk Indexers for processing. They can collect data from servers, applications, network devices, and more.
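The forwarding step can be pictured with a toy Python model. It is a simplification under stated assumptions: the `ToyForwarder` and `ToyIndexer` classes, the indexer names, and the batch-by-batch rotation are all hypothetical. Real forwarders are configured through files such as `outputs.conf` and use their own load-balancing behavior. What the sketch does reflect is that forwarded data travels with metadata such as `host`, `source`, and `sourcetype`.

```python
from dataclasses import dataclass, field
from itertools import cycle

@dataclass
class ToyIndexer:
    """Stand-in for an indexer: it simply stores whatever it receives."""
    name: str
    received: list = field(default_factory=list)

class ToyForwarder:
    """Hypothetical forwarder: tags lines with metadata and sends them in batches."""

    def __init__(self, host, indexers, batch_size=2):
        self.host = host
        self.batch_size = batch_size
        self._targets = cycle(indexers)  # rotate through indexers, one batch at a time

    def forward(self, source, sourcetype, lines):
        events = [
            {"host": self.host, "source": source, "sourcetype": sourcetype, "_raw": line}
            for line in lines
        ]
        for start in range(0, len(events), self.batch_size):
            batch = events[start:start + self.batch_size]
            next(self._targets).received.extend(batch)

idx1, idx2 = ToyIndexer("idx1"), ToyIndexer("idx2")
fwd = ToyForwarder("web01", [idx1, idx2])
fwd.forward("/var/log/app.log", "app_log", ["line 1", "line 2", "line 3", "line 4", "line 5"])
print(len(idx1.received), len(idx2.received))  # 3 2
```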

Once the data reaches the Indexers, the data indexing layer comes into play. Indexers are responsible for parsing, indexing, and storing the data in a searchable format. During this process, Splunk breaks the data into events, extracts relevant fields, and creates an index that allows for fast and efficient data retrieval. The indexing process makes it possible to search and analyze the data effectively.

The data search layer is handled by Search Heads, which provide the user interface for searching, analyzing, and visualizing the indexed data. Users can perform searches, create dashboards, and generate reports using Splunk's powerful search language. Search Heads distribute the search queries to the Indexers and consolidate the results for presentation to the user.
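The distribute-and-consolidate pattern behind Search Heads resembles map-reduce. The following Python sketch is a conceptual model, not Splunk code. Each hypothetical indexer computes partial counts over only its own events, and the search head merges those partial results into a single answer. The indexer data and function names are made up for illustration.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

# Hypothetical events already stored on three indexers.
INDEXERS = {
    "idx1": [{"status": "200"}, {"status": "500"}, {"status": "200"}],
    "idx2": [{"status": "404"}, {"status": "200"}],
    "idx3": [{"status": "500"}, {"status": "500"}],
}

def run_on_indexer(events, field):
    """Map step: an indexer counts field values over its own events only."""
    return Counter(e[field] for e in events if field in e)

def search_head(field):
    """Scatter the query to every indexer in parallel, then gather and merge."""
    total = Counter()
    with ThreadPoolExecutor() as pool:
        partials = pool.map(run_on_indexer, INDEXERS.values(), [field] * len(INDEXERS))
        for partial in partials:
            total.update(partial)  # reduce step: add the partial counts together
    return dict(total)

print(search_head("status"))
```

Because each indexer returns only small partial results, the search head never needs to pull back every raw event to answer an aggregate question.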

To gain a deeper understanding of Splunk's internal functions, it's crucial to explore its components and architecture, which we will cover in the following sections.

What are Parsing and Indexing?

Before delving into Splunk's components, let's clarify two important concepts: parsing and indexing. These terms are often misunderstood and used interchangeably by beginners.

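Parsing is the process of breaking down raw machine data into individual events and identifying key attributes such as each event's timestamp. Splunk applies configurable rules, typically defined per sourcetype, to decide where one event ends and the next begins and to recognize timestamps. Fields such as IP addresses and user names are also extracted from the raw data, although in Splunk most of these field extractions happen at search time rather than during indexing. Parsing gives the data structure and makes it searchable.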
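As a rough illustration of these parsing steps, this Python sketch breaks a hypothetical raw stream into events wherever a new line begins with a timestamp, so a multi-line error trace stays inside one event. It then parses that timestamp and pulls out an IP address and a user name with regular expressions. The log format and patterns are assumptions for this example; in Splunk, equivalent rules are configured rather than hard-coded.

```python
import re
from datetime import datetime

# Hypothetical raw stream: the second event spans several lines.
RAW_STREAM = """2024-05-01 10:15:02 INFO login ok user=alice src=10.0.0.5
2024-05-01 10:15:09 ERROR login failed user=bob src=192.168.1.20
Traceback (most recent call last):
  AuthError: bad password
2024-05-01 10:16:30 INFO logout user=alice src=10.0.0.5"""

TS_PATTERN = re.compile(r"^(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2})")
IP_PATTERN = re.compile(r"\bsrc=(\d{1,3}(?:\.\d{1,3}){3})\b")
USER_PATTERN = re.compile(r"\buser=(\w+)")

def break_events(stream):
    """Start a new event at each line that begins with a timestamp."""
    events, current = [], []
    for line in stream.splitlines():
        if TS_PATTERN.match(line) and current:
            events.append("\n".join(current))
            current = []
        current.append(line)
    if current:
        events.append("\n".join(current))
    return events

def parse_event(raw):
    """Extract the timestamp plus two example fields from one event."""
    ts = datetime.strptime(TS_PATTERN.match(raw).group(1), "%Y-%m-%d %H:%M:%S")
    ip = IP_PATTERN.search(raw)
    user = USER_PATTERN.search(raw)
    return {
        "_time": ts,
        "src_ip": ip.group(1) if ip else None,
        "user": user.group(1) if user else None,
        "_raw": raw,
    }

parsed = [parse_event(e) for e in break_events(RAW_STREAM)]
for event in parsed:
    print(event["_time"], event["user"], event["src_ip"])
```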

Indexing, on the other hand, is the process of storing the parsed data in a searchable format. Splunk creates an inverted index, which maps each unique word or term to the events and fields it appears in. This index enables fast and efficient searching, allowing users to retrieve relevant events based on specific keywords or criteria.
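The inverted-index idea fits in a few lines of Python. This is a conceptual sketch only; Splunk's actual on-disk index files are far more elaborate. The tokenizer here lowercases text and splits on anything other than letters, digits, and dots, so IP addresses stay whole. That tokenizer is an assumption chosen for simplicity, and the sample events are made up.

```python
import re
from collections import defaultdict

EVENTS = [
    "Failed password for admin from 10.0.0.7",
    "Accepted password for alice from 10.0.0.5",
    "Failed password for root from 10.0.0.7",
]

def tokenize(text):
    """Lowercase and split on anything that is not a letter, digit, or dot."""
    return set(re.findall(r"[a-z0-9.]+", text.lower()))

def build_index(events):
    """Map each term to the set of event IDs that contain it."""
    index = defaultdict(set)
    for event_id, text in enumerate(events):
        for term in tokenize(text):
            index[term].add(event_id)
    return index

def lookup(index, *terms):
    """Return IDs of events containing ALL terms (an implicit AND)."""
    postings = [index.get(t.lower(), set()) for t in terms]
    return sorted(set.intersection(*postings)) if postings else []

index = build_index(EVENTS)
print(lookup(index, "failed", "10.0.0.7"))  # [0, 2]
```

A lookup touches only the posting sets for the requested terms instead of rescanning every event. That is why keyword searches stay fast as data grows.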

Components of Splunk

Splunk comprises multiple components that work together to build a fully functional system. These components can be categorized into two main groups: Processing Components and Management Components.

The Processing Components handle the data and include:

Forwarders: These lightweight agents are installed on the devices or systems generating the data. They collect and forward the data to the Indexers for processing.

Indexers: Indexers receive the data from the Forwarders, parse it, index it, and store it in a searchable format. They are responsible for making the data accessible for searching and analysis.

Search Heads: Search Heads provide the user interface for searching, analyzing, and visualizing the indexed data. They distribute search queries to the Indexers and consolidate the results for presentation to the user.

The Management Components support the activities of the Processing Components and include:

Deployment Server: The Deployment Server manages the deployment and configuration of Forwarders, ensuring that they are properly set up and configured to collect and forward data.

License Master: The License Master (called the License Manager in newer Splunk releases) manages Splunk licenses for the other instances in the deployment and tracks their data ingestion against the licensed volume.

Master Cluster Node: In an indexer cluster, the master node (called the manager node in newer Splunk releases) coordinates the configuration of the peer Indexers and manages data replication and distribution across them.

Deployer: The Deployer distributes apps and configuration changes to the members of a search head cluster, ensuring consistency and ease of management.

Monitoring Console: The Monitoring Console provides a centralized view of the health and performance of the Splunk deployment, allowing administrators to monitor and troubleshoot the system.
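To picture how a Deployment Server decides what each Forwarder should receive, here is a small Python sketch. Splunk groups deployment clients into server classes and maps apps to those classes. The class names, host-name patterns, and app names below are hypothetical, and the wildcard matching with `fnmatch` only approximates how real server-class filters are evaluated.

```python
from fnmatch import fnmatch

# Hypothetical server classes: host-name patterns mapped to the apps they should receive.
SERVER_CLASSES = {
    "linux_web": {"patterns": ["web-*"], "apps": ["TA_nginx_inputs", "outputs_to_indexers"]},
    "windows_dc": {"patterns": ["dc*"], "apps": ["TA_windows_security", "outputs_to_indexers"]},
}

def apps_for_client(hostname):
    """Collect every app whose server class matches this forwarder's host name."""
    apps = []
    for server_class in SERVER_CLASSES.values():
        if any(fnmatch(hostname, p) for p in server_class["patterns"]):
            for app in server_class["apps"]:
                if app not in apps:  # avoid duplicates when classes overlap
                    apps.append(app)
    return apps

for host in ["web-01", "dc02", "db-01"]:
    print(host, apps_for_client(host))
```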

It's important to note that, apart from the universal forwarder, which ships as its own lightweight package, all these components come from the same Splunk Enterprise installation package. The specific role of a Splunk instance is determined by its configuration: you can configure a single instance to perform multiple roles or dedicate separate instances to each component, depending on your deployment architecture.
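Because roles are a matter of configuration, a deployment can be described as a mapping from instances to the roles they perform. The Python sketch below uses hypothetical instance names and a simple rule of thumb to contrast one instance holding several roles with roles spread across dedicated machines. It does not read or generate real Splunk configuration.

```python
from enum import Enum

class Role(Enum):
    FORWARDER = "forwarder"
    INDEXER = "indexer"
    SEARCH_HEAD = "search head"

# Hypothetical layouts: instance name -> roles that instance is configured for.
# A standalone instance takes its own inputs, indexes them, and serves searches.
STANDALONE = {"splunk-all-in-one": {Role.INDEXER, Role.SEARCH_HEAD}}
DISTRIBUTED = {
    "uf-web01": {Role.FORWARDER},
    "uf-db01": {Role.FORWARDER},
    "idx01": {Role.INDEXER},
    "idx02": {Role.INDEXER},
    "sh01": {Role.SEARCH_HEAD},
}

def instances_by_role(layout):
    """Invert the layout: for each role, list the instances that perform it."""
    result = {role: [] for role in Role}
    for instance, roles in layout.items():
        for role in roles:
            result[role].append(instance)
    return result

def can_search(layout):
    """Data is searchable only if some instance indexes and some instance searches."""
    by_role = instances_by_role(layout)
    return bool(by_role[Role.INDEXER]) and bool(by_role[Role.SEARCH_HEAD])

print(instances_by_role(STANDALONE)[Role.INDEXER])      # ['splunk-all-in-one']
print(instances_by_role(DISTRIBUTED)[Role.INDEXER])     # ['idx01', 'idx02']
print(can_search(STANDALONE), can_search(DISTRIBUTED))  # True True
```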

Architecture of Splunk

Now that we have a clear understanding of Splunk's components, let's explore its architecture. Splunk's flexibility allows for different deployment architectures based on the organization's requirements.

In a standalone deployment, a single Splunk instance handles data input, indexing, and search on its own. This architecture is suitable for small-scale environments or proof-of-concept scenarios.

For larger environments, Splunk can be deployed in a distributed architecture. In this setup, the Splunk package is configured to function as specific components, such as Forwarder, Indexer, Search Head, or a combination of them. This allows for scalability and load distribution.

For example, you can run dedicated Forwarders to collect data from various sources, Indexers to process and store it, and Search Heads to handle search queries and user interaction, each on separate machines. Additionally, you can create clusters of Indexers or Search Heads to manage high data volumes and ensure high availability.

The distributed architecture enables efficient resource utilization and allows Splunk to handle large-scale deployments effectively.

Standalone and Distributed Deployments

Splunk offers flexibility in its deployment architecture, allowing organizations to choose between standalone and distributed deployments based on their requirements.

Standalone Deployments

In a standalone deployment, a single Splunk instance performs all the functions, including data input, parsing, indexing, and searching. As shown in Image 2, the standalone instance handles data collection, data processing, and search capabilities within a single unit. This deployment model is suitable for small-scale environments, testing, or proof-of-concept scenarios where the data volume and user concurrency are limited.

Distributed Deployments

On the other hand, distributed deployments are designed to handle large-scale environments with high data volume and user concurrency. In a distributed setup, as illustrated in Image 1, the Splunk architecture is divided into separate components: Forwarders, Indexers, and Search Heads.

Forwarders are deployed on the data sources and are responsible for collecting and forwarding data to the Indexers. They can be installed on various devices, such as servers, network devices, and endpoints, to gather log data from different sources.

Indexers receive the data from Forwarders, process it by parsing and indexing, and store the indexed data. Multiple Indexers can be configured in a cluster to distribute the indexing load and ensure data availability and redundancy.

Search Heads provide the user interface for searching and interacting with the indexed data. They handle search requests from users, distribute the searches to the Indexers, and consolidate the results for presentation. Multiple Search Heads can be deployed to handle high user concurrency and provide load balancing.
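The redundancy of an indexer cluster comes from keeping several copies of each bucket of indexed data on different peer Indexers. Splunk calls the number of copies the replication factor. The Python sketch below chooses peers for each bucket with a simple rotating assignment. That placement rule is an assumption for illustration and not the real placement logic of the cluster's manager node. Bucket and peer names are hypothetical.

```python
def assign_copies(buckets, peers, replication_factor):
    """Place each bucket on `replication_factor` distinct peers, rotating the start peer."""
    if replication_factor > len(peers):
        raise ValueError("replication factor cannot exceed the number of peers")
    placement = {}
    for i, bucket in enumerate(buckets):
        placement[bucket] = [peers[(i + k) % len(peers)] for k in range(replication_factor)]
    return placement

def survives_loss(placement, failed_peer):
    """Every bucket stays available if at least one copy lives on another peer."""
    return all(any(p != failed_peer for p in copies) for copies in placement.values())

peers = ["idx1", "idx2", "idx3"]
placement = assign_copies(["bucket_a", "bucket_b", "bucket_c"], peers, replication_factor=2)
print(placement)
print(survives_loss(placement, "idx2"))  # True
```

With a replication factor of 1, losing any single peer would make some buckets unavailable. This is the trade-off between storage cost and availability that the cluster settings control.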

The distributed deployment model allows for scalability, performance, and fault tolerance. It enables organizations to handle large volumes of data, perform efficient searches, and scale horizontally by adding more Forwarders, Indexers, or Search Heads as needed.
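Scaling horizontally often starts with simple capacity arithmetic. The sketch below estimates how many Indexers a given daily ingest volume would need. The per-indexer capacity and headroom figures are placeholders you would replace with numbers from your own sizing exercise; they are not Splunk recommendations.

```python
import math

def indexers_needed(daily_ingest_gb, per_indexer_gb_per_day, headroom=0.25):
    """Round up the indexer count after reserving a fraction of capacity as headroom."""
    if not 0 <= headroom < 1:
        raise ValueError("headroom must be in [0, 1)")
    usable = per_indexer_gb_per_day * (1 - headroom)
    return math.ceil(daily_ingest_gb / usable)

# Placeholder figures for illustration only.
print(indexers_needed(daily_ingest_gb=900, per_indexer_gb_per_day=200))  # 6
```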

By leveraging a distributed architecture, Splunk can effectively manage and analyze machine data in enterprise-level environments, providing the necessary scalability and performance to derive valuable insights from the data.

Combine Everything in a Single Pane

Splunk provides a unified platform for collecting, processing, and analyzing machine data from diverse sources. Its powerful search and visualization capabilities enable organizations to gain valuable insights from their data, monitor their infrastructure, and detect and investigate security incidents.

By understanding Splunk's components, architecture, and deployment options, users can effectively leverage its features to derive meaningful insights from their machine data. Splunk's ability to handle large volumes of data, support various data formats, and provide real-time analysis makes it a leading choice for log management and analysis.

Whether you're an IT operations professional, security analyst, or business user, Splunk empowers you to unlock the value of your machine data and make data-driven decisions. With its user-friendly interface, extensive app ecosystem, and powerful search language, Splunk provides a single pane of glass for monitoring, troubleshooting, and securing your environment.

To learn more about Splunk and explore its capabilities, refer to the official Splunk documentation and Splunk community for tutorials, best practices, and real-world use cases.

We hope this article helps you understand Splunk, its components, architecture, and operational workflow. We'll end this article here and cover more about Splunk in upcoming articles. Please keep visiting thesecmaster.com for more such technical information. Visit our social media pages on Facebook, Instagram, LinkedIn, Twitter, Telegram, Tumblr, & Medium and subscribe to receive information like this.


Arun KL

Arun KL is a cybersecurity professional with 15+ years of experience in IT infrastructure, cloud security, vulnerability management, Penetration Testing, security operations, and incident response. He is adept at designing and implementing robust security solutions to safeguard systems and data. Arun holds multiple industry certifications including CCNA, CCNA Security, RHCE, CEH, and AWS Security.