
How to Deploy Splunk Search Head (SH)?

A Search Head (SH) in a Splunk environment is a vital component designed to handle search requests and distribute them across the indexers. Our focus in this tutorial is to install and configure a Search Head, ensuring it is set up efficiently to manage and distribute search queries. This guide will walk you through the step-by-step process of installing the Splunk Search Head on a Linux machine, configuring it for optimal performance, and ensuring it integrates seamlessly with your Splunk deployment.

After reading this tutorial, you will be able to:

Install Splunk Search Head on a Linux machine

Configure the Search Head for efficient query distribution

Integrate the Search Head with other Splunk components, such as indexers

Whether you're a Splunk administrator looking to optimize your deployment or a newcomer to the world of Splunk, this guide will provide you with the knowledge and skills necessary to successfully deploy and configure a Splunk Search Head.

Let's dive in and get your Splunk Search Head up and running!

What Is a Search Head? Why Should You Deploy One?

A Search Head (SH) in Splunk is a specialized server designed to handle search requests and distribute them across the indexers in your Splunk environment. Think of it as the command center for your Splunk searches, where users interact with the data through search queries, dashboards, and reports. The Search Head processes these queries, coordinates with the indexers to retrieve the necessary data, and presents the results to the user.

When a user submits a search query, the Search Head breaks it down into smaller, more manageable chunks and distributes them to the relevant indexers. The indexers then process the search requests in parallel, returning the results to the Search Head. Finally, the Search Head merges the results and presents them to the user in a unified, easy-to-understand format.

Why Should You Deploy a Search Head?

Deploying a Search Head offers several significant advantages:

Improved Performance: By separating the search management process from the indexing process, a Search Head allows for more efficient use of resources. This separation ensures that the indexers can focus on data processing and storage, while the Search Head handles the search queries and user interactions, ultimately leading to improved overall system performance.

Scalability: As your Splunk deployment grows, a Search Head enables you to scale your search capabilities independently of your indexing capacity. You can add more indexers to handle increased data volume without impacting the Search Head's performance, and vice versa. This flexibility allows you to optimize your Splunk environment based on your specific needs and requirements.

Enhanced User Experience: A Search Head provides a centralized, user-friendly interface for interacting with your Splunk environment. Users can easily create and manage searches, build reports and dashboards, and visualize their data without needing to understand the underlying infrastructure. This abstraction layer empowers users to focus on deriving insights from their data rather than worrying about the technical details of the Splunk deployment.

Better Security and Access Control: With a dedicated Search Head, you can implement granular access controls and security measures. You can define user roles, permissions, and data access policies at the Search Head level, ensuring that users only have access to the data and features they need. This centralized security management simplifies the process of maintaining a secure and compliant Splunk environment.

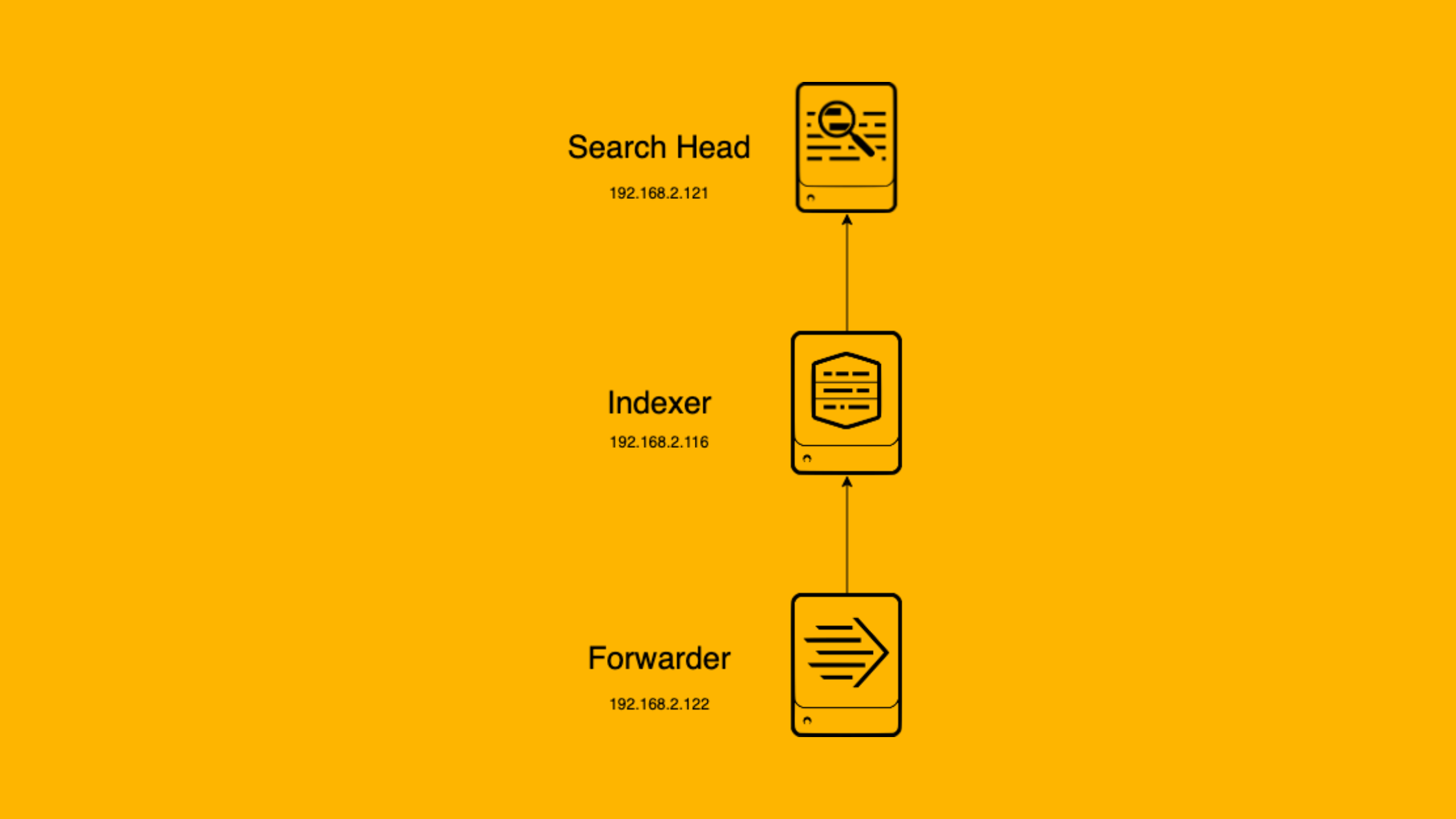

In the diagram above, you can see how the Search Head fits into the overall Splunk architecture. It communicates with various indexers to retrieve and process data, acting as the intermediary between the user's search interface and the backend data storage.

Deploying a Search Head is crucial for any large-scale Splunk deployment. It ensures that your searches are handled efficiently, your data is accessible quickly, and your users have a seamless experience interacting with the data.

Next, we will dive into the step-by-step process of installing and configuring a Search Head on a Linux machine.

Lab Setup

To better understand the process of deploying a Splunk Search Head, let's walk through a simple lab setup. Our lab consists of three machines: a Windows machine, a Mac, and a Linux machine. Each of these machines plays a specific role in our Splunk environment.

Windows Machine (Log Source): The Windows machine serves as our log source. We have deployed a Splunk Universal Forwarder on this machine to collect and forward logs to the Indexer.

Mac (Indexer with Search Head): On the Mac, we have set up a Splunk Indexer with a Search Head. The Indexer receives and indexes the logs forwarded by the Universal Forwarder on the Windows machine. The Search Head on the Mac allows users to search and analyze the indexed data.

Linux Machine (Search Head): In this tutorial, our focus will be on installing a Splunk Search Head on the Linux machine. We will configure this Search Head to communicate with the Indexer on the Mac, establishing a distributed search environment.

Here's a visual representation of our lab setup:

By deploying a Search Head on the Linux machine and configuring it to work with the Indexer on the Mac, we will create a distributed search environment. This setup allows for better resource utilization and improved performance, as the search processing is offloaded from the Indexer to the dedicated Search Head.

In the next section, we will dive into the step-by-step process of installing and configuring the Splunk Search Head on the Linux machine, ensuring seamless integration with the existing Indexer on the Mac.

Configuration Overview

Before we dive into the step-by-step process of setting up the Search Head and configuring the Indexer as a Search Peer, let's review the configurations we'll be performing on the Search Head.

Download and Install Splunk Enterprise: On the Linux machine, we will download and install a full instance of Splunk Enterprise. This will provide us with the necessary components to set up the Search Head.

Enable Distributed Search: To establish a distributed search environment, we need to ensure that the distributed search feature is enabled on both the Indexer (Mac) and the Search Head (Linux) instances. Enabling distributed search allows the Search Head to communicate with the Indexer and distribute search tasks efficiently.

Add Indexer as a Search Peer: With distributed search enabled, we will add the Indexer (Mac) as a Search Peer in the Search Head (Linux). This configuration allows the Search Head to send search requests to the Indexer and retrieve the relevant results.

By completing these configurations, we establish a distributed search environment where the Search Head can efficiently communicate with the Indexer and distribute search tasks.

Step-by-Step Guide: Deploying Splunk Search Head

In this section, we will walk you through the process of deploying a Splunk Search Head (SH) on a Linux machine using a .tgz file. We will also cover enabling distributed search and adding the Indexer as a search peer. Follow these steps to get your Search Head up and running:

Step 1: Install Splunk Enterprise on the Linux Machine

1. Download the Splunk Enterprise .tgz file from the official Splunk website.

2. Open a terminal and navigate to the directory where you downloaded the .tgz file.

3. Extract the .tgz file with tar, replacing <version> and <build> in the file name with the version and build numbers of the file you downloaded.

4. Navigate to the bin folder inside the extracted splunk directory.

5. Start Splunk for the first time with the --accept-license option, then set a username and password for the Splunk admin user when prompted.

6. Once the Splunk service is running, open a web browser and go to http://<linux-machine-ip>:8000 to access the Splunk Web UI.
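The extraction and first-start steps above can be sketched as the following shell commands. This is a minimal sketch rather than the exact commands from the original walkthrough: the package file name pattern, the /opt install location, and the `run` helper (which only prints each command unless `APPLY=1` is set) are assumptions made for illustration.

```shell
#!/bin/sh
# Sketch of Step 1. Prints each command by default; set APPLY=1 to execute.
# Assumptions: the package file name pattern and the /opt install location.
SPLUNK_TGZ="splunk-<version>-<build>-Linux-x86_64.tgz"  # replace the placeholders
INSTALL_DIR="/opt"
SPLUNK_HOME="$INSTALL_DIR/splunk"

# Hypothetical helper: run the command only when APPLY=1, otherwise print it.
run() {
  if [ "${APPLY:-0}" = "1" ]; then
    "$@"
  else
    printf '%s\n' "$*"
  fi
}

# Extract the package; the archive unpacks into a top-level "splunk" directory.
run tar -xzf "$SPLUNK_TGZ" -C "$INSTALL_DIR"

# First start: accept the license. Splunk then prompts for the admin
# username and password.
run "$SPLUNK_HOME/bin/splunk" start --accept-license

# Confirm that splunkd is running before opening the Web UI on port 8000.
run "$SPLUNK_HOME/bin/splunk" status
```

Run the script once as is to review the commands, then rerun it with `APPLY=1` after replacing the placeholders. Writing to /opt typically requires elevated privileges.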

Step 2: Enable Distributed Search

Log in to the Splunk Web UI on both the Indexer (Mac) and the Search Head (Linux) using the admin credentials, and confirm that Distributed Search is turned on. It is enabled by default.

On the Indexer and Search Head: Go to "Settings" > "Distributed Search" > "Distributed Search setup" > "Turn on Distributed Search" > "Save".
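To double-check the same setting from the command line, one option is to print the effective distsearch.conf settings with btool. This is a hedged sketch: it assumes Splunk is installed in /opt/splunk, and the `run` helper is illustrative and only prints the command unless `APPLY=1` is set.

```shell
#!/bin/sh
# Sketch: inspect the effective [distributedSearch] settings on an instance.
# Assumption: Splunk is installed in /opt/splunk.
SPLUNK_HOME="/opt/splunk"

# Hypothetical helper: run the command only when APPLY=1, otherwise print it.
run() {
  if [ "${APPLY:-0}" = "1" ]; then
    "$@"
  else
    printf '%s\n' "$*"
  fi
}

# --debug also shows which configuration file each setting comes from.
run "$SPLUNK_HOME/bin/splunk" cmd btool distsearch list distributedSearch --debug
```

If the output does not show `disabled = true` under the [distributedSearch] stanza, distributed search should be on for that instance.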

Step 3: Add the Indexer as a Search Peer

1. On the Search Head, navigate to "Settings" > "Distributed Search" > "Search Peers".

2. Click on "New" to add a new search peer.

3. Enter the following details:

- "Host": The IP address or hostname of the Indexer.

- "Management Port": The management port of the Indexer (default: 8089).

- "Splunk Username" and "Splunk Password": The credentials for accessing the Indexer.

4. Click on "Save" to add the Indexer as a search peer.

After a couple of minutes, the Replication status of the new search peer changes to Successful.
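The same peer can also be added from the Search Head's command line instead of Splunk Web. Below is a minimal sketch using the `splunk add search-server` command. The /opt/splunk path, the placeholder host and credentials, and the `run` helper (print-only unless `APPLY=1` is set) are assumptions for illustration.

```shell
#!/bin/sh
# Sketch of Step 3 from the CLI, run on the Search Head (Linux).
# Assumptions: install path, placeholder host name, and placeholder credentials.
SPLUNK_HOME="/opt/splunk"
INDEXER_URI="https://<indexer-host>:8089"   # management port of the Indexer

# Hypothetical helper: run the command only when APPLY=1, otherwise print it.
run() {
  if [ "${APPLY:-0}" = "1" ]; then
    "$@"
  else
    printf '%s\n' "$*"
  fi
}

# -auth is the Search Head login; -remoteUsername and -remotePassword are
# the credentials of an admin user on the Indexer.
run "$SPLUNK_HOME/bin/splunk" add search-server "$INDEXER_URI" \
  -auth "<sh-admin>:<sh-password>" \
  -remoteUsername "<indexer-admin>" \
  -remotePassword "<indexer-password>"

# List the search peers known to this Search Head.
run "$SPLUNK_HOME/bin/splunk" list search-server -auth "<sh-admin>:<sh-password>"
```

Passing passwords on the command line can leave them in shell history, so the Web UI route may be preferable on shared machines.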

You can now start searching for events from the Search Head. That's it.

We hope this article helps you deploy a Search Head and add the Indexer as a Search Peer.

That's all for now. We will cover more Splunk topics in upcoming articles. Please keep visiting thesecmaster.com for more technical information like this. Visit our social media pages on Facebook, Instagram, LinkedIn, Twitter, Telegram, Tumblr, and Medium, and subscribe to receive updates.


Arun KL

Arun KL is a cybersecurity professional with 15+ years of experience in IT infrastructure, cloud security, vulnerability management, Penetration Testing, security operations, and incident response. He is adept at designing and implementing robust security solutions to safeguard systems and data. Arun holds multiple industry certifications including CCNA, CCNA Security, RHCE, CEH, and AWS Security.